The price of running Serverless functions keeps going down. This is not entirely surprising.

First, and in general, the basic technologies of the computer industry have been improving for decades (see Moore’s law).

Second, cloud computing is a competitive market with many offerings; cloud vendors are racing to offer the best price/performance.

Third, and most important in the long term, cloud vendors can make investments that only pay off at the scale at which they operate. For example, Amazon, Microsoft, or Google can afford to develop their own processors, tailored to run well in specific environments such as cloud instances (like Amazon EC2) and Serverless functions.

If we take Amazon's recent improvements as an example, we have seen that:

- Lambda function duration is now billed in 1ms increments instead of being rounded up to the nearest 100ms. So, if your typical function runs for 50ms, you now pay for those 50ms instead of a minimum of 100ms. Assuming the same price per ms, the duration part of your cost has been cut in half.

- Even more recently, Amazon announced that you can run Lambda functions on Graviton2, Amazon’s own Arm-based processor.
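To make the arithmetic in the first bullet concrete, here is a minimal Python sketch of duration billing that rounds each invocation up to the next billing increment. The function names and the abstract `price_per_ms` are illustrative assumptions: actual Lambda duration pricing is per GB-second and depends on the configured memory, and the per-request charge is left out entirely.

```python
import math


def billed_duration_ms(actual_ms: float, increment_ms: int) -> int:
    """Round an invocation's run time up to the next billing increment."""
    if actual_ms <= 0 or increment_ms <= 0:
        raise ValueError("durations must be positive")
    return math.ceil(actual_ms / increment_ms) * increment_ms


def duration_cost(actual_ms: float, increment_ms: int, price_per_ms: float) -> float:
    """Duration charge only; `price_per_ms` is a hypothetical flat unit price."""
    return billed_duration_ms(actual_ms, increment_ms) * price_per_ms


# Compare the old 100ms increments with the new 1ms increments.
for run_ms in (50, 120):
    old = duration_cost(run_ms, 100, 1.0)
    new = duration_cost(run_ms, 1, 1.0)
    print(f"{run_ms}ms run: billed {old:.0f} units before, {new:.0f} units now")
```

For a 50ms function, the old model bills 100ms and the new one 50ms, which is where the factor of two comes from. For a 120ms function the saving is smaller: 200ms billed before versus 120ms now.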

According to Amazon, running on Graviton2 saves money in two ways:

- Functions run more efficiently due to the Graviton2 architecture.

- You pay less for the time that they run. Lambda functions powered by Graviton2 are designed to deliver up to 19 percent better performance at 20 percent lower cost.
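Because the two effects compound, a rough way to estimate the duration saving is to multiply the run-time ratio by the price ratio. The sketch below assumes the same memory size on both architectures and reads Amazon's "20 percent lower cost" as a 20 percent lower price per unit of duration; the function name is hypothetical, and the run times should come from your own measurements.

```python
def arm_to_x86_cost_ratio(x86_ms: float, arm_ms: float, price_discount: float = 0.20) -> float:
    """Arm duration cost divided by x86 duration cost, for the same memory size.

    Assumes cost = run time * price per ms, with the Arm price per ms being
    `price_discount` lower than the x86 price (20 percent by default).
    """
    if x86_ms <= 0 or arm_ms <= 0:
        raise ValueError("run times must be positive")
    if not 0 <= price_discount < 1:
        raise ValueError("price_discount must be in [0, 1)")
    # Run-time ratio times price ratio gives the cost ratio.
    return (arm_ms / x86_ms) * (1.0 - price_discount)
```

For example, a function measured at 100ms on x86 and 81ms on Arm would give 0.81 × 0.8 = 0.648, roughly a 35 percent saving, whereas an Arm run time of 125ms would cancel the price advantage entirely (ratio 1.0).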

The good news for Corticon.js users is that the same Serverless decision services can now run on either x86 or Arm/Graviton2 processors, without implementation changes and without regenerating the decision services.

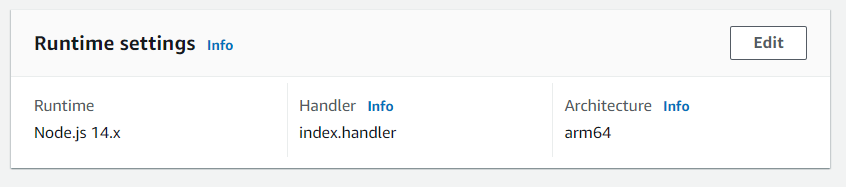

How to configure new and existing functions to run on x86 or Arm/Graviton2 processors

You only need to change the function's runtime settings. This can be a bit confusing, as you might look for this setting in the Configuration tab and not find it. In the console, on the main page of your Lambda function, scroll to the bottom until you reach the Runtime settings section.

Click Edit. From there, you can switch between the two architectures, x86_64 and arm64.

Of course, it helps that Corticon.js decision services do not depend on a specific CPU architecture, so you can switch between architectures very easily: no recompilation, no regeneration of the service code, and no rebundling is needed.
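If you prefer scripting over the console, the same switch can be made with the AWS SDK. The sketch below assumes Python with a boto3 Lambda client and a function deployed as a .zip package (not a container image). As far as we know, the Lambda API sets the architecture through `UpdateFunctionCode` rather than `UpdateFunctionConfiguration`, and that call expects a code package, so the sketch downloads the currently deployed package and uploads the very same bytes with the new architecture. This only works because the Corticon.js bundle does not depend on the CPU architecture. The helper name `switch_architecture` is hypothetical.

```python
import urllib.request

VALID_ARCHITECTURES = ("x86_64", "arm64")


def switch_architecture(lambda_client, function_name: str, architecture: str = "arm64") -> list:
    """Redeploy a zip-packaged Lambda function's current code on another architecture.

    `lambda_client` is assumed to be a boto3 Lambda client, e.g. boto3.client("lambda").
    """
    if architecture not in VALID_ARCHITECTURES:
        raise ValueError(f"architecture must be one of {VALID_ARCHITECTURES}")

    function = lambda_client.get_function(FunctionName=function_name)
    current = function["Configuration"].get("Architectures", ["x86_64"])
    if current == [architecture]:
        return current  # already on the requested architecture

    # Download the deployed package from the short-lived presigned URL...
    with urllib.request.urlopen(function["Code"]["Location"]) as response:
        package = response.read()

    # ...and upload the same bytes, only changing the architecture.
    updated = lambda_client.update_function_code(
        FunctionName=function_name,
        ZipFile=package,
        Architectures=[architecture],
    )
    return updated["Architectures"]
```

In practice you would call it as `switch_architecture(boto3.client("lambda"), "my-decision-service")`, where the function name is a placeholder. Passing the client in keeps the helper easy to test with a stub. Direct `ZipFile` uploads are limited in size, so a large bundle would have to go through Amazon S3 (`S3Bucket`/`S3Key`) instead.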

Future

Well, it is hard to predict the future. As Niels Bohr said, “Prediction is very difficult, especially if it's about the future!” But that should not stop us from giving it some thought and speculating a bit.

One may wonder if Wright’s law can be applied to Serverless functions and EC2 instances.

Note: Here is a good read on Wright’s law and how it differs from Moore’s law.

In short, this law states that for every cumulative doubling of units produced, costs will fall by a constant percentage.
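Stated as a formula, Wright's law gives the cost of the n-th cumulative unit as C(n) = C(1) · n^(-b), where b = -log2(1 - p) and p is the fractional cost decline per doubling. The Python sketch below simply evaluates that formula; the function and parameter names are illustrative.

```python
import math


def wright_unit_cost(first_unit_cost: float, cumulative_units: float, decline_per_doubling: float) -> float:
    """Cost of the n-th cumulative unit under Wright's law.

    C(n) = C(1) * n ** (-b), with b = -log2(1 - p), so every doubling of
    cumulative units multiplies the cost by (1 - p).
    """
    if not 0 <= decline_per_doubling < 1:
        raise ValueError("decline_per_doubling must be in [0, 1)")
    if cumulative_units < 1:
        raise ValueError("cumulative_units must be at least 1")
    b = -math.log2(1.0 - decline_per_doubling)
    return first_unit_cost * cumulative_units ** (-b)
```

With p = 0.2, the cost goes from 100 for the first unit to 80 at two units and 64 at four units (up to floating-point rounding): each doubling removes the same 20 percent.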

Wright’s law has held in many industries and has been found to predict cost declines more reliably than Moore’s law. See here and here.

Although this law was formulated from observations in manufacturing, perhaps it also applies to cloud computing. Have Amazon or Microsoft been able to decrease their prices by a constant percentage for every doubling of the instances or Serverless functions they “manufacture/instantiate”?

Or maybe, instead of counting instances, we should count computing capacity (for example, the sum of TeraFLOPS across all available cloud machine instances).
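If providers did publish comparable series of cumulative quantity (instances, functions, or TeraFLOPS) and unit price, the question above could be tested by fitting Wright's law on a log-log scale: a straight line of slope -b in log2(cost) versus log2(cumulative units) implies a constant decline of 1 - 2^(-b) per doubling. The sketch below is an ordinary least-squares fit under that assumption; it works with whichever quantity you decide to count, and the names are hypothetical.

```python
import math


def estimate_decline_per_doubling(cumulative_units, unit_costs):
    """Fit log2(cost) = a - b * log2(units) by ordinary least squares.

    Returns the implied fractional cost decline per doubling, 1 - 2 ** (-b).
    """
    if len(cumulative_units) != len(unit_costs) or len(cumulative_units) < 2:
        raise ValueError("need at least two paired observations")
    xs = [math.log2(u) for u in cumulative_units]
    ys = [math.log2(c) for c in unit_costs]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("cumulative_units must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx  # this is -b
    return 1.0 - 2.0 ** slope
```

Fed with a synthetic series generated from a 20 percent decline per doubling (units 1, 2, 4, 8 at costs 100, 80, 64, 51.2), it recovers 0.2 up to rounding. With real, noisy data, how well the straight line fits matters as much as the estimate itself.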

These questions are hard or impossible to answer from the outside, as we know neither the providers' internal pricing nor the computing capacity they are putting online. The closest proxy we may have is the growth in cloud vendors' revenue.

For example, this source shows revenue doubling every 2 to 3 years, slowing to every 3 to 4 years, while this one shows global revenue doubling in a year or less. Either way, we are still in a fast-growing market.
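To compare these figures, a doubling time T can be converted into an equivalent constant annual growth rate r by solving (1 + r)^T = 2. A minimal Python sketch (the function name is illustrative):

```python
def annual_growth_rate(doubling_time_years: float) -> float:
    """Constant yearly growth rate r such that (1 + r) ** T == 2."""
    if doubling_time_years <= 0:
        raise ValueError("doubling time must be positive")
    return 2.0 ** (1.0 / doubling_time_years) - 1.0


for years in (1, 2, 3, 4):
    print(f"doubling every {years} year(s) = {annual_growth_rate(years):.0%} per year")
```

Doubling every 2 to 3 years corresponds to roughly 26 to 41 percent growth per year, every 3 to 4 years to roughly 19 to 26 percent, and doubling in one year to 100 percent.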

All of this suggests that we should expect the price of Serverless functions to keep going down, as the overall quantity of deployed cloud computing capacity keeps increasing.
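The link between growing volume and falling prices can be sketched by combining Wright's law with a doubling time. Assuming, hypothetically, that cumulative cloud capacity doubles every T years and that each doubling lowers cost by a fraction p, prices would fall by 1 - (1 - p)^(1/T) per year. Neither p nor T is actually known for cloud vendors, so the sketch only shows the shape of the relationship; the function name is illustrative.

```python
def annual_price_decline(decline_per_doubling: float, doubling_time_years: float) -> float:
    """Yearly price decline implied by Wright's law if cumulative volume
    doubles every `doubling_time_years` years (both inputs are assumptions)."""
    if not 0 <= decline_per_doubling < 1:
        raise ValueError("decline_per_doubling must be in [0, 1)")
    if doubling_time_years <= 0:
        raise ValueError("doubling_time_years must be positive")
    # One year corresponds to 1 / T doublings of cumulative volume.
    return 1.0 - (1.0 - decline_per_doubling) ** (1.0 / doubling_time_years)
```

For instance, with an assumed p of 20 percent, doubling every year would mean a 20 percent yearly price decline, and doubling every two years about 10.6 percent per year.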

Additionally, it is conceivable that cloud vendors could optimize the software stacks used to run Serverless functions, in particular for languages like Python and JavaScript, given how popular they are.

Who knows? Will we see a new CPU architecture dedicated to these languages, and perhaps a new VM that runs these interpreted languages faster while consuming less memory? Certainly, the financial incentive and the need to differentiate are there for the cloud vendors.

Conclusion

It is now cheaper to run Corticon.js Serverless decision services in AWS Lambda, and you can try it immediately with your existing deployed decision services. You do not need to change the services at all; you only choose which CPU architecture to run on in the runtime settings. Choice and simplicity are good.

Let us know if you are getting better performance or savings on your running costs. Hopefully, you are getting both 😊.

Thierry Ciot

Thierry Ciot is a Software Architect on the Corticon Business Rule Management System. Ciot has gained broad experience in the development of products ranging from development tools to production monitoring systems. He is now focusing on bringing Business Rule Management to JavaScript and in particular to the serverless world where Corticon will shine. He holds two patents in the memory management space.