Data Science Workflow: From Research Experiments to Business Use-Cases

A Fast, Efficient, Reusable and Scalable approach to applying Data Science and Machine Learning to a problem statement of interest, from experiments through to production.

With the advent of Data Science and Machine Learning, most industries have realized how beneficial it is to harness the power of data. This extends not just to Manufacturing, Health Care, and Automobiles but also to every single business differing only in scale. Having said that, no matter which business it applies to, the goal is to map the data to valuable insights and in turn to dollars. So, in simple terms:

"Data Science is the systematic study of the structure and behavior of data to deduce valuable and actionable insights"

The application of Data Science to any business always starts with experiments. These experiments undergo several iterations and are finally made ready for production. Be it an experimental phase or a production phase, the process involves a simple sequence of steps that the data under study is put through. The sequence may be simple, but the complexity of the steps inside it may vary. We call this the Data Science Workflow.

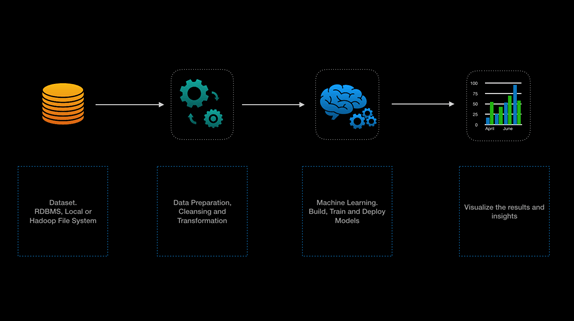

The following is a simple example of a Data Science Process Workflow:

From Data Capture and Storage through to Reporting the Insights, a wide variety of tools and frameworks can be used. This works well during the initial phase of experimentation but gets cumbersome when moving to production, because that step requires established development practices. Many data scientists spend several weeks or months just setting up their development environment(s). In addition, they need to install and import dependencies, manage broken data pipelines, serialize and deserialize data arrays for several algorithms, tune hyper-parameters manually, maintain the metadata store and data store for both successful and unsuccessful experiments, manage cloud infrastructure and scalability, and finally build a separate setup for the "Production-ready" solution.

The unfortunate part is that these steps need to be repeated for each new use-case, without any certainty of success. A Data Scientist may hold what Harvard Business Review has called the sexiest job of the 21st century, but they have a tough time trying out an array of tools and adopting development practices, a good portion of which are not their actual concern.

Understanding this situation, we at Progress have compiled a "simple-yet-scalable" approach to ease the job of both a Data Scientist who wants to stick to their techniques and algorithms, and a Business Analyst/User who wants to apply the actionable insights to the business.

What is the Approach?

Since the Workflow is a sequence of steps in a defined order, it can be conceptualized as a Directed Graph, where the Nodes constitute the steps in the flow, and Edges denote the flow of information. The drag-and-drop based Graphical User Interface is the icing on the cake. In this approach, the spotlight remains on the design, while the execution complexity is abstracted at various levels from the user.
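To make the directed-graph idea concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions rather than the actual implementation: the `run_workflow` function, the node names, and the toy data are all hypothetical, and the only library used is the standard `graphlib` module (Python 3.9+). Each Node is a function, each Edge says whose output feeds whom, and a topological sort decides the execution order.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def run_workflow(nodes, edges):
    """Run every node after all of its upstream nodes have finished.

    nodes: dict mapping a node name to a function that accepts a dict of
           upstream results, keyed by upstream node name.
    edges: list of (upstream, downstream) pairs describing information flow.
    """
    # Build the "node -> set of predecessors" map that TopologicalSorter expects.
    predecessors = {name: set() for name in nodes}
    for upstream, downstream in edges:
        predecessors[downstream].add(upstream)

    results = {}
    # static_order() yields a node only after all of its predecessors,
    # and raises graphlib.CycleError if the graph contains a cycle.
    for name in TopologicalSorter(predecessors).static_order():
        inputs = {up: results[up] for up in predecessors[name]}
        results[name] = nodes[name](inputs)
    return results


# Hypothetical four-node workflow with toy data (names are illustrative only).
nodes = {
    "acquire": lambda inputs: [3, 1, 2, None],
    "prepare": lambda inputs: [x for x in inputs["acquire"] if x is not None],
    "learn": lambda inputs: sum(inputs["prepare"]) / len(inputs["prepare"]),
    "report": lambda inputs: f"mean = {inputs['learn']:.1f}",
}
edges = [("acquire", "prepare"), ("prepare", "learn"), ("learn", "report")]

results = run_workflow(nodes, edges)
print(results["report"])
```

The user only declares nodes and edges (the design), while ordering and data hand-off are handled by the runner. A graphical editor would produce the same two structures behind the scenes.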

For simplicity, let's classify the steps involved in a typical Workflow as below:

- Data Acquisition

- Data Preparation

- Discovery and Machine Learning

- Report Results and Insights

Data Acquisition

The data of interest might have to be read from disparate data sources, such as an RDBMS, a Local File System, or the Hadoop Distributed File System, to name a few. The format of the underlying data may also vary: CSV, Parquet, ORC, and so on. To keep the Workflow agnostic of where the data is located and in what format, abstract this entity as a Dataset. Based on the location and format of the data, the respective Readers act on the Dataset and bring the data into the execution flow. This can be represented as a Node in the Graph.
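One way to picture the Dataset abstraction is a small registry that maps a format name to a Reader. The sketch below is a hedged illustration, not the product's API: `Dataset`, `READERS`, and the reader functions are hypothetical names, only local files are handled, and JSON Lines stands in as a second format so that the example needs nothing beyond the Python standard library. Parquet, ORC, RDBMS, or HDFS readers would be registered in the same way, backed by the appropriate third-party library.

```python
import csv
import json
from dataclasses import dataclass


def read_csv(location):
    """Read a CSV file into a list of row dictionaries (values stay strings)."""
    with open(location, newline="") as handle:
        return list(csv.DictReader(handle))


def read_json_lines(location):
    """Read a file that holds one JSON object per line."""
    with open(location) as handle:
        return [json.loads(line) for line in handle if line.strip()]


# Hypothetical registry: format name -> reader function.
READERS = {"csv": read_csv, "jsonl": read_json_lines}


@dataclass
class Dataset:
    """Describes where the data lives and how it is encoded."""

    location: str
    format: str

    def read(self):
        """Pick the Reader for this format and return the rows."""
        try:
            reader = READERS[self.format]
        except KeyError:
            raise ValueError(
                f"no reader registered for format {self.format!r}"
            ) from None
        return reader(self.location)
```

With this in place, a call such as `Dataset("orders.csv", "csv").read()` (the file name is hypothetical) returns rows without the rest of the Workflow knowing how they were stored. Supporting a new format means adding one entry to the registry.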

Data Preparation

Once the data is read, we need to cleanse and prepare it for analysis. This involves performing actions on the data such as Joins, Unions, Filters, and Transformations. Represent these actions as respective Nodes.
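As a rough sketch of what such preparation Nodes might look like, each action below is a plain Python function over lists of row dictionaries. The function names and the sample rows are assumptions made for illustration; a production system would more likely delegate these operations to an engine such as Spark.

```python
def filter_rows(rows, predicate):
    """Filter node: keep only the rows for which predicate(row) is true."""
    return [row for row in rows if predicate(row)]


def union(left, right):
    """Union node: concatenate two row collections (duplicates are kept)."""
    return list(left) + list(right)


def inner_join(left, right, key):
    """Join node: pair rows from both sides that share the same key value."""
    index = {}
    for row in right:
        index.setdefault(row[key], []).append(row)
    return [{**l, **r} for l in left for r in index.get(l[key], [])]


def transform(rows, fn):
    """Transformation node: apply fn to every row."""
    return [fn(row) for row in rows]


# Hypothetical sample data.
orders = [
    {"customer_id": 1, "amount": 120.0},
    {"customer_id": 2, "amount": 15.0},
    {"customer_id": 1, "amount": 40.0},
]
customers = [
    {"customer_id": 1, "name": "Ada"},
    {"customer_id": 2, "name": "Grace"},
]

# Chain the nodes: filter, then join, then transform.
large = filter_rows(orders, lambda row: row["amount"] >= 40.0)
joined = inner_join(large, customers, "customer_id")
prepared = transform(
    joined, lambda row: {**row, "amount_cents": int(row["amount"] * 100)}
)
print(prepared)
```

Because every node takes rows in and gives rows out, the nodes can be wired together in any order the Graph requires.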

Discovery and Machine Learning

This, being a decision-making step, involves applying a rich set of Machine Learning techniques and algorithms. During the experimental phase, there is a need for a REPL Notebook to code and view results.

Note: REPL stands for Read-Eval-Print-Loop, a programming environment that provides an interactive coding console. It is now one of the most widely used and preferred methods for experimental analysis. Zeppelin and Jupyter are the prominent Notebooks built on this paradigm.

Before the algorithms are ready for production, they undergo a number of iterations of fine-tuning and refactoring. A Node that represents the REPL Notebook brings the coding console into the Graph.

Once the code is production-ready, it can be templatized. The algorithm templates can be re-used on a different input with similar characteristics. These templates, when named accordingly and defined with their respective inputs and outputs, can be another set of Nodes for the Graph. The executors abstract the execution environment (Spark, Dask, TensorFlow), regardless of which programming language (Java, Scala, Python, and so on) the algorithm is coded in.
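The relationship between a template and an executor can be sketched as follows. This is a simplified illustration with hypothetical names (`AlgorithmTemplate`, `InProcessExecutor`, `ThreadExecutor`, `mean_center`): the two executors are stand-ins built on the Python standard library, not Spark, Dask, or TensorFlow bindings. The point is only that the template declares its name, inputs, and outputs once, and the choice of executor does not change the result.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass(frozen=True)
class AlgorithmTemplate:
    """A reusable algorithm with a name and declared inputs and outputs."""

    name: str
    inputs: Tuple[str, ...]
    outputs: Tuple[str, ...]
    run: Callable[..., tuple]


def _call(template, data):
    """Bind named inputs to the algorithm and name its outputs."""
    missing = [name for name in template.inputs if name not in data]
    if missing:
        raise ValueError(f"{template.name}: missing inputs {missing}")
    values = template.run(*(data[name] for name in template.inputs))
    return dict(zip(template.outputs, values))


class InProcessExecutor:
    """Stand-in executor: runs the template in the calling thread."""

    def execute(self, template, **data):
        return _call(template, data)


class ThreadExecutor:
    """Stand-in executor: runs the template on a worker thread."""

    def execute(self, template, **data):
        with ThreadPoolExecutor(max_workers=1) as pool:
            return pool.submit(_call, template, data).result()


def mean_center(values):
    """Toy algorithm: subtract the mean from every value."""
    mean = sum(values) / len(values)
    return [v - mean for v in values], mean


template = AlgorithmTemplate(
    name="mean_center",
    inputs=("values",),
    outputs=("centered", "mean"),
    run=mean_center,
)

for executor in (InProcessExecutor(), ThreadExecutor()):
    print(type(executor).__name__, executor.execute(template, values=[1.0, 2.0, 3.0]))
```

Both executors return the same dictionary of named outputs, which is what lets a template be dropped into the Graph as a Node without tying it to one execution environment.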

Report Results and Insights

Consume the results and insights with a rich set of charts and tables. Templates for individual charts and tables, or for a complex dashboard of choice, form the Nodes for visualization.

This does not end here. Once the Workflow is ready to solve a business problem, and if it is found generic enough, the Workflow itself can be templatized and applied on Datasets of similar verticals or be nested in other Workflow graphs as Nodes. It can be extended further to a more granular level to gain full control on ease of use without compromising on performance.
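Nesting follows naturally once a Workflow exposes the same call interface as a Node. The sketch below assumes a deliberately simplified, linear Workflow (a chain rather than a full graph), and every name in it is hypothetical. It shows a "cleaning" Workflow being reused as a single step inside a larger "reporting" Workflow.

```python
class Workflow:
    """A named, linear chain of steps; a simplification of a full graph.

    Because a Workflow is callable like any other step, it can itself be
    used as a step (a Node) inside another Workflow.
    """

    def __init__(self, name, steps):
        self.name = name
        self.steps = list(steps)

    def __call__(self, data):
        for step in self.steps:
            data = step(data)
        return data


def drop_missing(values):
    """Remove missing entries."""
    return [v for v in values if v is not None]


def scale_to_unit(values):
    """Divide every value by the maximum so the largest becomes 1.0."""
    top = max(values)
    return [v / top for v in values]


def mean(values):
    """Average of the values, rounded to two decimals for display."""
    return round(sum(values) / len(values), 2)


# A reusable inner workflow ...
cleaning = Workflow("cleaning", [drop_missing, scale_to_unit])
# ... nested as one step of an outer workflow.
reporting = Workflow("reporting", [cleaning, mean])

print(reporting([2, None, 4, 8]))
```

The outer Workflow does not need to know how many steps the inner one contains, which is what makes a templatized Workflow reusable across similar Datasets.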

Conclusion

Considering the situation of Data Scientists, DevOps Engineers, Business Analysts and Business Users in the world of data-driven decision-making, there is a real need for this kind of approach. Experiments on the data, the creation of the necessary execution environments, performance tuning, and feedback on results and insights tie these roles together, and an approach like this makes their lives easier.

To make the best use of any software or application, the design should be as pluggable and extendable as possible. This pluggable and extendable design is what makes this approach Fast, Efficient, Re-usable and Scalable. Feel free to contact us to learn more about how you can try out this "life-made-easy" approach.