Minimizing Planned Downtime for OpenEdge Applications

Large periodic maintenance items wreak havoc on users who try to access the system while it is down. There are ways to minimize the downtime for most maintenance items, and this blog explains them in more depth.

If you’re planning an OpenEdge upgrade, a move to the cloud, a platform migration, a dump and load, or any other major project that takes the database down for hours on end, it’s important to start with a few things. First, understand what requires downtime and how long that outage will last. If the outage exceeds the acceptable downtime window, you need to get creative to shorten it.

Let’s assume the project requires a dump and load of the database. This process is commonly required when changing platforms or when defragmenting the database to make it run more efficiently. There are many ways to perform the dump and load and many ways to optimize it for speed to limit the downtime. In a benchmark exercise, I took a customer’s database and used all the tools available to OpenEdge to test dump and load timings. Below are some metrics for the database and the results.

Database statistics

| Metric | Value |
| --- | --- |
| Database size | 36 GB |
| # of tables | 835 |
| # of indexes | 1,736 |
| # of storage areas | 49 Data, 49 Index |
| Largest tables | |

Dump and load methods benchmarked

- Dictionary dump and load

- Dictionary dump and bulk load

- Buffer copy from one database to another

- Binary dump and load

The Results

| Process | Time |
| --- | --- |
| Dictionary dump and load | 8 hours, 20 minutes |
| Dictionary dump and bulk load | 4 hours, 3 minutes |
| Buffer copy between databases | 2 hours, 7 minutes |
| Binary dump and load | 1 hour, 7 minutes |

You can see that the method chosen for the dump and load can have a dramatic impact on the downtime needed to do the work. However, this database is 36 GB, which is pretty small by modern standards. What if the database were 100 GB or 500 GB? Now we are looking at downtimes on the order of 80 hours for the slowest method and 10 hours for the fastest. Keep in mind, this is just the dump and load time, which is only one part of a large project. It does not account for backups, verification, or any problems encountered along the way. The downtime can quickly escalate to many hours or even days, even with this relatively small 36 GB database.
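To get a feel for how the benchmark numbers grow with database size, here is a minimal sketch that scales the 36 GB timings linearly. Linear scaling is only an assumption for illustration; real timings depend on hardware, table layout, and index rebuild cost. The function name `estimate_hours` is hypothetical. Under that assumption the estimates come out to roughly 23 to 116 hours for the slowest method and 3 to 16 hours for the fastest across the 100 GB to 500 GB range, which brackets the figures quoted above.

```python
# Benchmark timings from the 36 GB test database, converted to minutes.
BENCHMARK_GB = 36
BENCHMARK_MINUTES = {
    "Dictionary dump and load": 8 * 60 + 20,
    "Dictionary dump and bulk load": 4 * 60 + 3,
    "Buffer copy between databases": 2 * 60 + 7,
    "Binary dump and load": 1 * 60 + 7,
}


def estimate_hours(method: str, target_gb: float) -> float:
    """Estimate dump and load hours, assuming time grows linearly with size."""
    minutes = BENCHMARK_MINUTES[method] * target_gb / BENCHMARK_GB
    return minutes / 60


if __name__ == "__main__":
    for size_gb in (100, 500):
        for method in BENCHMARK_MINUTES:
            hours = estimate_hours(method, size_gb)
            print(f"{size_gb} GB  {method}: {hours:.1f} h")
```

Treat the output as a planning aid only. A rehearsal on a copy of your own database is the reliable way to size the outage.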

If your business cannot tolerate the downtime required to perform the dump and load, the most likely result is that the work does not get done. The can gets kicked down the road, often in the hope that the need for a dump and load goes away. Eventually it will catch up with you, likely at the worst possible time. It might be time to consider some outside-the-box approaches to the database dump and load.

Progress has a product called Pro2 that provides replication to MSSQL, Oracle, or OpenEdge. The Progress Professional Services team has used this tool numerous times to perform a dump and load to another OpenEdge database with minimal downtime, regardless of database size.

How Pro2 Works

The Pro2 product has two components: Change Data Capture (CDC) and Replication. The Change Data Capture piece uses Replication Triggers or Native CDC (OpenEdge 11.7+) to track all the creates, updates, and deletes done on the database. All these changes are recorded in a table called the ReplQueue. The ReplQueue does not copy the record; it only notes which record changed and what the change was (create, update, or delete).

The Replication code looks at the ReplQueue and processes the changes, bringing the current record to the target database. This is a constant process that provides for real-time replication. The join between the source record and the target record is the ROWID of the source record. The target table has an additional field called prrowid that stores the source ROWID and there is a unique index on this field.
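The queue-and-replay idea described above can be illustrated with a small toy model. This is not Pro2 code: the class `ToyReplication` and its methods are hypothetical, and plain dictionaries stand in for the source and target databases. It only shows the shape of the technique, where the queue stores the source ROWID and the event type, and the replication pass copies the current source record into a target keyed by that ROWID (the role the prrowid field plays).

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class ToyReplication:
    """Toy stand-in for change capture plus replication (not the Pro2 API)."""

    source: Dict[int, dict] = field(default_factory=dict)  # source ROWID -> record
    target: Dict[int, dict] = field(default_factory=dict)  # prrowid -> record copy
    repl_queue: List[Tuple[int, str]] = field(default_factory=list)  # (ROWID, event)

    # Change capture: note which record changed and how, not the record itself.
    def create(self, rowid: int, record: dict) -> None:
        self.source[rowid] = dict(record)
        self.repl_queue.append((rowid, "create"))

    def update(self, rowid: int, **changes) -> None:
        self.source[rowid].update(changes)
        self.repl_queue.append((rowid, "update"))

    def delete(self, rowid: int) -> None:
        del self.source[rowid]
        self.repl_queue.append((rowid, "delete"))

    # Replication: replay the queue in order against the target.
    def process_queue(self) -> int:
        processed = 0
        while self.repl_queue:
            rowid, event = self.repl_queue.pop(0)
            if event == "delete":
                self.target.pop(rowid, None)
            elif rowid in self.source:
                # Bring over the record as it currently exists on the source.
                self.target[rowid] = dict(self.source[rowid])
            processed += 1
        return processed


if __name__ == "__main__":
    repl = ToyReplication()
    repl.create(1, {"name": "a"})
    repl.update(1, name="b")
    repl.create(2, {"name": "c"})
    repl.delete(2)
    repl.process_queue()
    print(repl.target)
```

Because the queue holds only a pointer to the record, several queued changes to the same record all resolve to its current state when they are replayed, which keeps the queue entries small.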

The initial seed of the target database is done by a bulk load process. This is where each table is read front to back and the records are copied to the target database.

How Pro2 Helps to Minimize Dump and Load Downtime

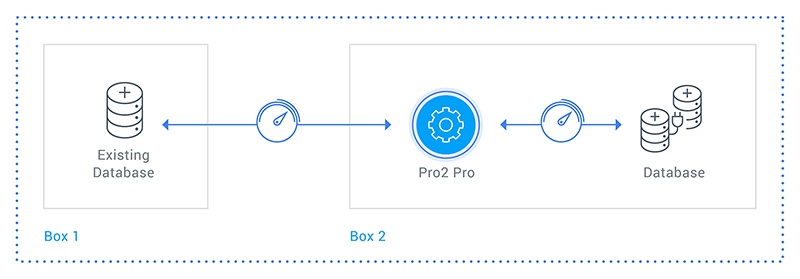

This picture shows how Pro2 can work to create a freshly dumped and loaded database that is the target of Pro2 replication.

Box 1 is where the current production database is running with users connected. Box 2 is where a new database is receiving updates from the production database. The dump and load process is accomplished by the bulk load process described above.

The effort to replace the database in box 1 with the new database in box 2 is:

- Make sure the ReplQueue is empty on box 1

- Shut down the database on box 1

- Remove the prrowid field and index from the database in box 2

- Back up the database on box 2

- Restore the database to box 1

- Start up the database on box 1

For most databases, regardless of size, the downtime will be less than two hours, and often less than one hour.
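The ordering of the cutover steps matters, and the first one is really a precondition. A minimal sketch of that idea, assuming a hypothetical `run_cutover` helper and placeholder actions (the real steps are database administration tasks, not Python calls), might look like this:

```python
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], None]]


def run_cutover(queue_depth: Callable[[], int], steps: List[Step]) -> List[str]:
    """Run the cutover steps in order, but only once the queue is drained."""
    pending = queue_depth()
    if pending != 0:
        # Cutting over with queued changes would lose updates on the new database.
        raise RuntimeError(f"ReplQueue still holds {pending} entries; not cutting over")
    completed = []
    for name, action in steps:
        action()
        completed.append(name)
    return completed


def placeholder() -> None:
    """Hypothetical stand-in for the real administration task."""


# Step names follow the list above; the actions are placeholders.
CUTOVER_STEPS: List[Step] = [
    ("Shut down the database on box 1", placeholder),
    ("Remove the prrowid field and index on box 2", placeholder),
    ("Back up the database on box 2", placeholder),
    ("Restore the database to box 1", placeholder),
    ("Start up the database on box 1", placeholder),
]

if __name__ == "__main__":
    for step in run_cutover(lambda: 0, CUTOVER_STEPS):
        print("done:", step)
```

The outage runs from the shutdown step to the startup step, so anything that can be rehearsed or scripted ahead of time shortens the window.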

Dealing With Existing Pro2 Customers

Pro2 customers have an extra challenge. Not only do they need to take the downtime for the dump and load on their production database, they also need to take downtime on the target database for the bulk load process, because the dump and load changes all the ROWIDs on the source database.

When Pro2 is installed, the bulk load process tends to take between three and five days to complete. This is not a big deal at the time, as no business processes depend on the target database yet. Fast forward a while, and that target database becomes as critical as the source production database, if not more so. For most customers, a three- to five-day outage to rebuild the Pro2 target is unacceptable.

Pro2 to the Rescue (Again)

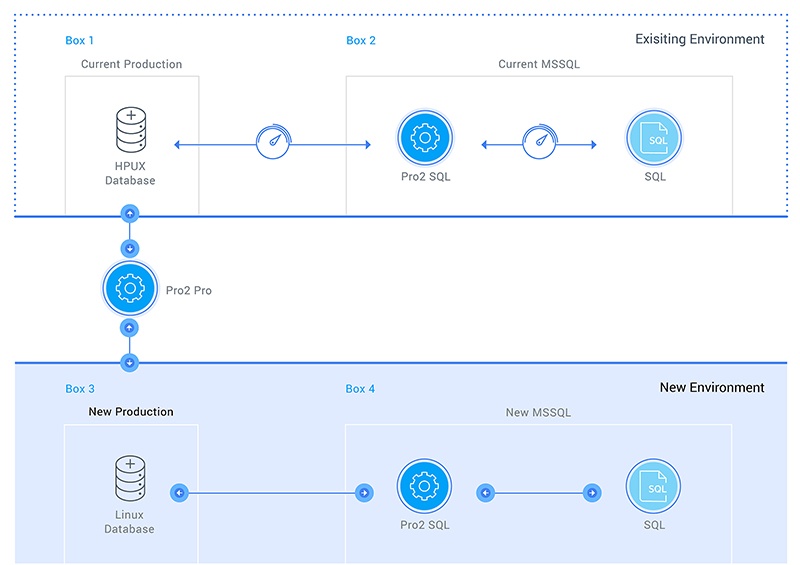

For these customers, we can again use Pro2 to make a second path, first to another OpenEdge database as described above, and then a path to the target database. The picture above shows this. It assumes a platform migration from HP-UX to Linux and a Pro2 target that is MSSQL.

In the picture above, box 1 and box 2 already exist. We build the path to box 3, which is the dumped and loaded database, similar to the database in the first picture. Then we build out the path from box 3 to box 4. The trick to make this work with Pro2SQL is to enable second pass replication, where the second pass replicates to the OpenEdge database in box 3. Once the bulk load is completed for box 3, the path from box 3 to box 4 is created and bulk loaded.

The go-live process is to migrate to box 3 and box 4. The downtime required for the cutover is the time it takes to remove the prrowid field and index from the database in box 3. The typical downtime is less than one hour.

Conclusion

It is important to minimize the downtime needed for a project. Downtime windows are often small, and if a dump and load is required, it’s time to get creative. Running the dump and load in parallel can help, but it may not be enough. Progress Services has been very successful in helping customers limit their downtime to a couple of hours or less, using tooling created specifically for this purpose.

If you want to learn more about OpenEdge Services, contact us.