A No-Code/Low-Code Serverless Solution for Processing Stream Data

Stream processing is a mechanism for processing very large amounts of data in real time. For example, imagine a car insurance company that wants to charge its customers per distance driven on a daily basis. It would have to process lots of location data from an enormous fleet of vehicles.

Or imagine a country that wants to set up a COVID-19 diagnostic system based on symptoms reported in real time.

This kind of processing has become standardized and very accessible thanks to technology stacks like Kafka as well as serverless offerings from cloud vendors.

For example, Amazon Kinesis is a scalable and durable real-time data streaming service. For more info see Amazon Kinesis Data Streams.

Corticon.js is a no-code/low-code and cloud-native rule engine that allows business users to author decision services that can be deployed as serverless functions. Learn more about Corticon.js here.

In this blog, we will see how combining these two technologies provides you with great benefits. We will use, as an example, a decision service that processes healthcare patient records to determine both the probability of someone having COVID-19 and the patient’s risk level and, based on these results, notifies a healthcare provider in real time.

Why Would You Use These Two Technologies?

There are several reasons, among others:

- The rate of incoming data is large and variable. There will be bursts when lots of patient records come in, and at other times the rate will slow down. The Kinesis stream will take care of receiving the records and making them available for decision processing. If lots of data comes in and the backend cannot process it immediately, the stream will store the records automatically for you.

- The processing of the data is decoupled from writing the data to the stream (also called data ingestion).

- Most importantly, you want to empower business specialists to author and maintain the rules on how to process the healthcare records because the project needs to be ready “yesterday” and your backlog is already more than full.

By using a rule-based, no-code serverless decision service like Corticon.js, you gain productivity because the processor of the records (the stream consumer in Kinesis parlance) is authored directly by the business specialist.

- Just as importantly, you gain agility, as the same business user will be able to adjust the rules very quickly as the business evolves, without the costly and time-consuming round trips that are typical between business users and programmers. You are freeing your precious programming resources to focus on the security and scalability of the solution.

- But it does not stop there. You gain even more agility because the business user does not need to be trained in any of the cloud technologies, so you can get started faster and without additional costs. We will see in one of the next sections how this works.

Who Is This Blog For?

This blog is for architects and cloud application designers, as it shows a pattern that can be used to improve a current or future cloud architecture and design. It is also useful for the integrators of such systems, as we will get an understanding of how a no-code decision service can be integrated into the stream processing.

In the next sections, we will see how simple it is to create a data stream as well as configure a serverless decision service from the rules.

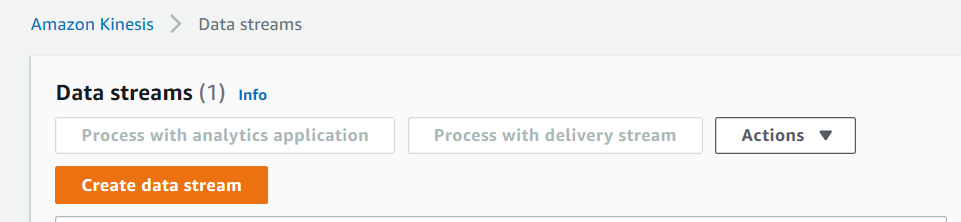

Creating the Kinesis Stream

This is a pretty straightforward task from the AWS console.

You select Kinesis from the list of services and click the create button.

You will get to the following form:

This is where you enter a name and the number of shards you want to configure. We won’t get into the details of shards here, but essentially this is where you configure the maximum possible throughput. The number of shards can be adjusted after creation when your traffic pattern changes. To get started, simply configure one shard.
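To give a feel for how the shard count relates to throughput, here is a minimal sketch that estimates the number of shards from an expected write rate. It assumes the per-shard write limits of a provisioned Kinesis data stream (about 1,000 records per second and about 1 MB per second). The stream name `patient-records` and both helper functions are hypothetical names used only for illustration; `StreamName` and `ShardCount` are the parameter names of the Kinesis CreateStream API.

```javascript
// Per-shard write limits assumed for a provisioned Kinesis data stream.
const MAX_RECORDS_PER_SECOND_PER_SHARD = 1000;
const MAX_BYTES_PER_SECOND_PER_SHARD = 1024 * 1024; // about 1 MB

// Estimate how many shards are needed to absorb the expected write traffic.
// Whichever limit (record count or bytes) is hit first drives the result.
function estimateShardCount(recordsPerSecond, averageRecordBytes) {
  const byRecordCount = Math.ceil(recordsPerSecond / MAX_RECORDS_PER_SECOND_PER_SHARD);
  const byBytes = Math.ceil(
    (recordsPerSecond * averageRecordBytes) / MAX_BYTES_PER_SECOND_PER_SHARD
  );
  return Math.max(1, byRecordCount, byBytes);
}

// Build the request parameters for creating the stream (hypothetical helper).
function buildCreateStreamParams(streamName, shardCount) {
  return { StreamName: streamName, ShardCount: shardCount };
}

// Example: 500 patient records per second, roughly 1 KB each.
const shardCount = estimateShardCount(500, 1024);
const createStreamParams = buildCreateStreamParams('patient-records', shardCount);
console.log(createStreamParams);
```

With these example numbers a single shard is enough, which matches the advice to start with one shard and adjust later.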

Processing the Data on the Consumer Side

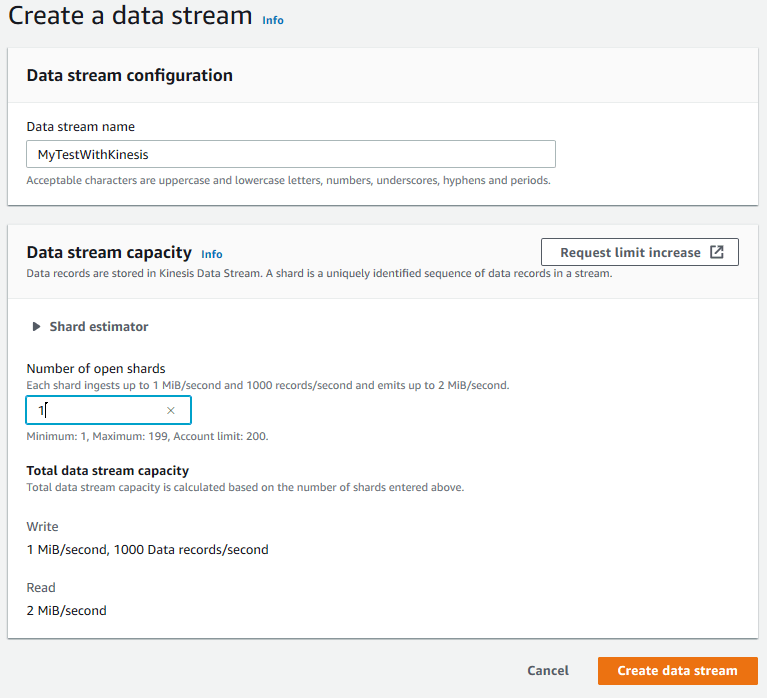

A healthcare specialist has written a set of rules. The first set computes the probability the patient has COVID-19 based on current knowledge while the second set determines if the patient needs immediate medical attention based on the probability, age and other criteria.

This is illustrated with the following Corticon.js rule flow.

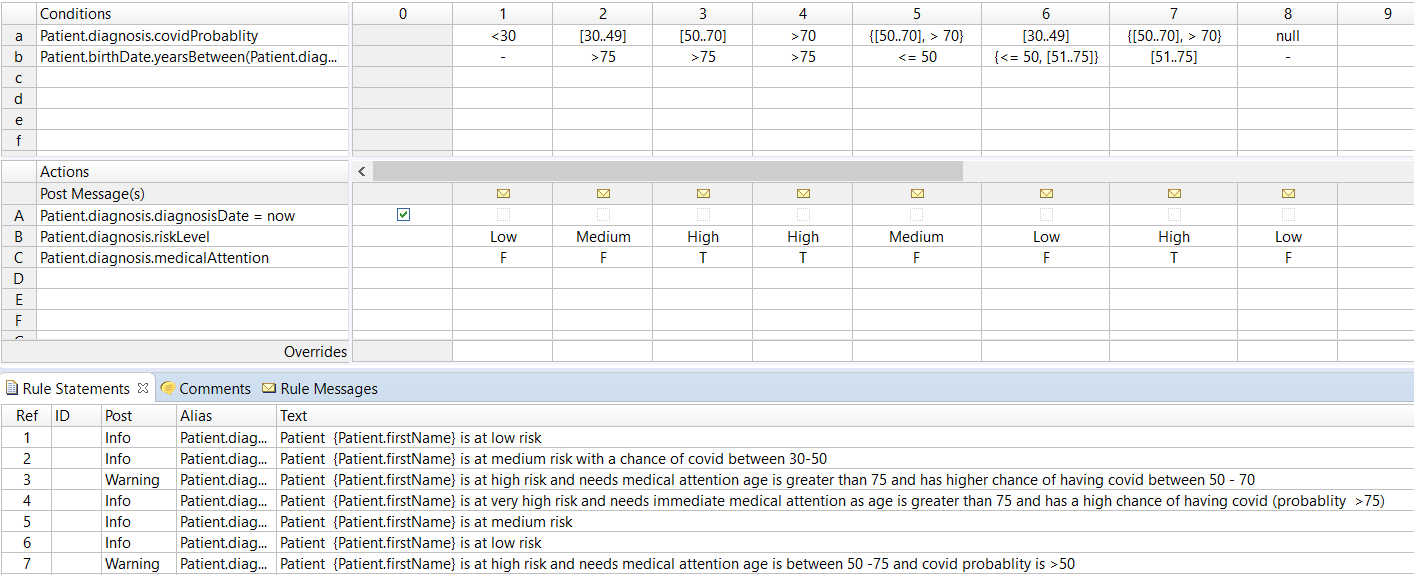

And here is the ComputeDiagnosis rulesheet:

Notice how the healthcare specialist simply works in a spreadsheet-like interface to:

- Assess various conditions (top panel)

- Act based on these conditions (middle panel)

Working with a spreadsheet interface allows most business users to express simple to very complex rules without having to write a single line of code.

Additionally, you will notice in the third panel (bottom one), a set of rule statements documenting the business logic.

These rule statements will be generated at runtime and are typically used for auditing or tracing purposes. In our example, we also use them as content when we generate the notification to the care provider.

These rules can get quite complicated and would be hard to program correctly and quickly if they had to be coded in a regular programming language. It’s simpler, more reliable and faster to let the healthcare specialist author, maintain and test the rules. This provides a good division of labor: the integrator can concentrate on securing and scaling the solution without having to worry about the implementation and testing of the rules themselves.

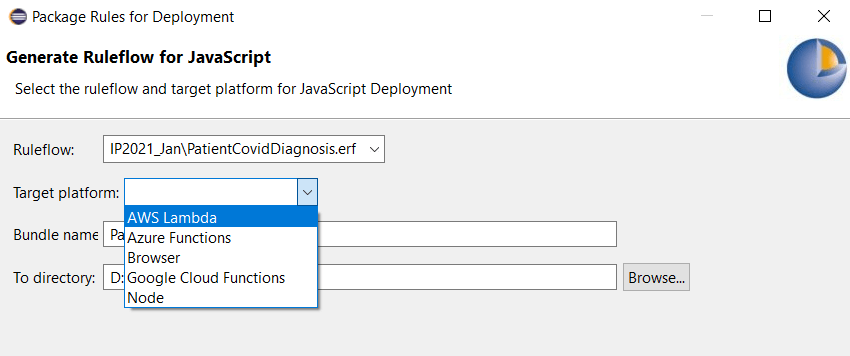

Now we are ready to deploy the rules as an AWS Serverless Lambda function with a single click as shown below:

As you can see, the same decision service can be bundled for deployment in Azure or Google Cloud as serverless functions. And here you see one additional benefit of using Corticon.js: the rule authors do not need to know anything about cloud technologies or be trained on them. They write the rules independently of where these rules will execute.

Thus you gain even more productivity. You can refer to this blog https://www.progress.com/blogs/no-code-decision-services-for-serverless-mobile-and-browser-apps for additional information and in particular to see how decision services can also be run directly in the frontend (browser or mobile app) to provide a more responsive UI.

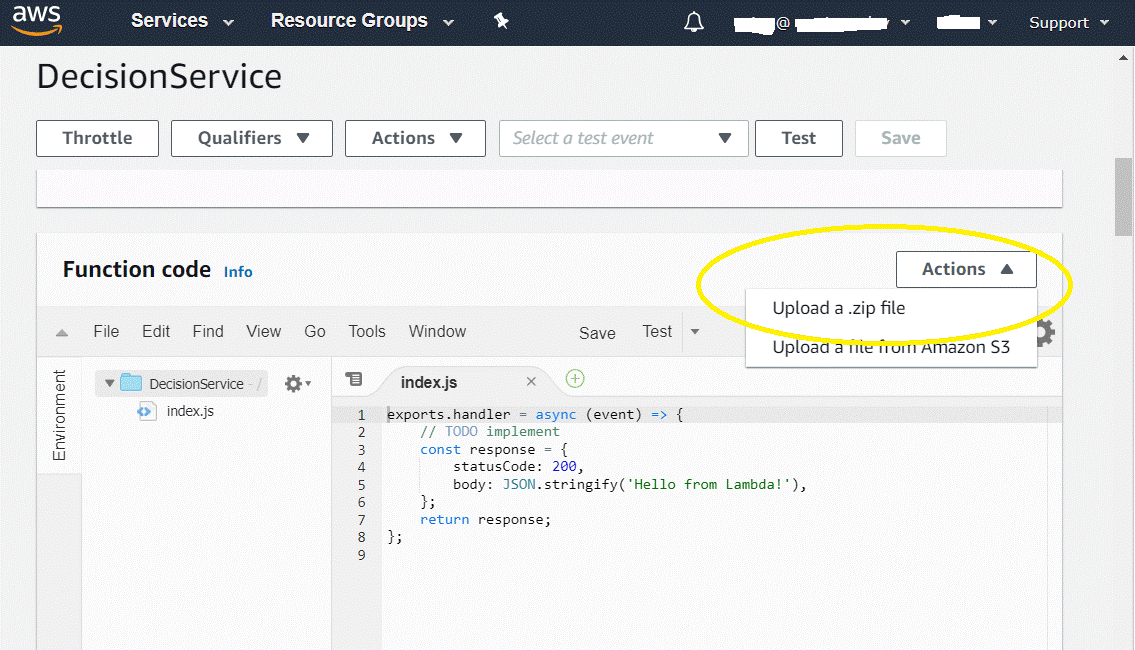

You create the AWS Lambda function and upload the generated zip file as shown in the screen below.

Now you have a serverless decision service ready to scale and handle a huge load in the data stream. The next step consists of configuring how Kinesis will call this decision service:

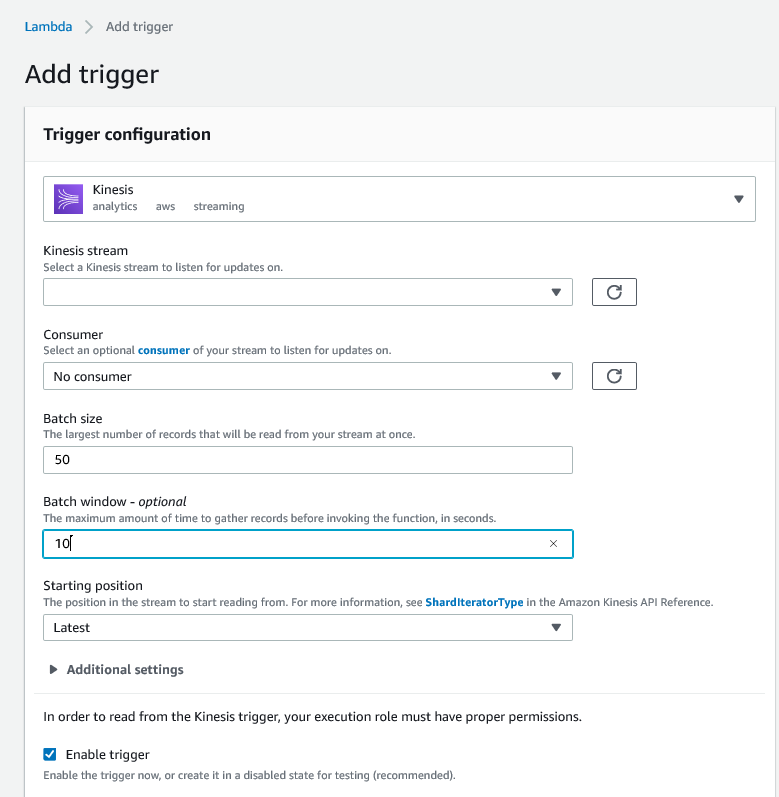

We click Add Trigger in the Lambda function console, select “Kinesis analytics aws streaming,” and then select the name of the stream we want to consume data from. We click save and that’s it: our decision service is ready.

In just a few clicks, we have created a highly scalable solution to process the records.

Understanding the Scalability of the Solution—How Fast the Decision Service Will Process Records

The decision service will be called with one or more records at a time. You have control over that process with two parameters: the “Batch Size” and the “Batch Window.”

The “Batch Size” specifies the maximum number of records we want to process at a time. For example, in the screen below I specify 50. When a lot of data is produced at a time, my decision service won’t be called for each record but as soon as 50 records have accumulated in the shard.

Now, when few records are pushed to the stream, we may not want to wait until the threshold of 50 records is reached. That’s where the “Batch Window” comes into play. Essentially, I can specify, as in the example below, that when 10 seconds have elapsed and fewer than 50 records have been received, the decision service is called anyway with the current batch of records.
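The interplay between the two parameters can be sketched as a simple rule. This is a simplified model for illustration only, not the actual Lambda implementation: the function name and its arguments are hypothetical, and the real service applies further conditions (for example, it also invokes the function when the accumulated payload reaches its size limit).

```javascript
// Simplified model of when a batch is handed to the decision service.
// pendingRecordCount: records accumulated in the shard since the last invocation
// secondsSinceFirstRecord: time elapsed since the first pending record arrived
function shouldInvoke(pendingRecordCount, secondsSinceFirstRecord, batchSize, batchWindowSeconds) {
  if (pendingRecordCount === 0) {
    return false; // nothing to process yet
  }
  if (pendingRecordCount >= batchSize) {
    return true; // the batch is full, no need to wait for the window
  }
  // The batch is not full: invoke only once the batch window has elapsed.
  return secondsSinceFirstRecord >= batchWindowSeconds;
}

// Values from the example: Batch Size of 50 and Batch Window of 10 seconds.
console.log(shouldInvoke(50, 1, 50, 10));  // full batch after 1 second
console.log(shouldInvoke(12, 3, 50, 10));  // partial batch, window not elapsed
console.log(shouldInvoke(12, 10, 50, 10)); // partial batch, window elapsed
```

In this model the first and third calls lead to an invocation, while the second keeps waiting for more records or for the window to elapse.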

There is one additional case to look into. Say that producers put 50 records in the stream in 100ms but my decision service takes 280ms to process them. Data records will accumulate faster than the processing function can handle.
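To put numbers on this, the following sketch compares the arrival rate with the processing rate. It assumes a single invocation at a time on one shard and steady traffic at the figures quoted above; the function name is hypothetical.

```javascript
// Compare how fast records arrive with how fast one sequential consumer drains them.
// batchRecords: records per batch
// arrivalMs: time it takes producers to write one batch
// processingMs: time it takes the decision service to process one batch
function backlogGrowthPerSecond(batchRecords, arrivalMs, processingMs) {
  const arrivalRate = (batchRecords * 1000) / arrivalMs;       // records per second in
  const processingRate = (batchRecords * 1000) / processingMs; // records per second out
  const growth = Math.max(0, arrivalRate - processingRate);    // backlog added per second
  return { arrivalRate, processingRate, growth };
}

// 50 records arrive every 100ms, but processing 50 records takes 280ms.
const rates = backlogGrowthPerSecond(50, 100, 280);
console.log(
  `in: ${rates.arrivalRate}/s, out: ${rates.processingRate.toFixed(1)}/s, ` +
  `backlog grows by ${rates.growth.toFixed(1)} records/s`
);
```

Under these assumptions, 500 records arrive each second while fewer than 180 are processed, so the backlog grows by a little over 320 records per second for as long as the burst lasts.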

This may not be an issue in all cases. You may be fine with having the stream accumulate the data and letting the function catch up when there is less traffic.

But if you want to process all records in real time, you have two options here:

- Increase the memory allocated to the Lambda function, as you will get a faster runtime (roughly twice as fast each time you double the memory size). So, in our case, we could increase the memory size from 128 MB to 512 MB and, on average, execute the decision service in about 70ms (280/4).

- There is also an advanced setting in the Kinesis trigger UI called “Concurrent batches per shard.” This allows us to control how many instances of the decision service can run in parallel on each shard. Again, in our case, we could set it to 3, since 280ms of processing for every 100ms of incoming data calls for at least 2.8 concurrent invocations.
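Both options can be worked out with a little arithmetic, and the resulting trigger settings written down as data. The sketch below assumes the rule of thumb above (runtime inversely proportional to memory) and uses the parameter names of the Lambda CreateEventSourceMapping API, which, as far as I understand, correspond to the console fields “Batch Size,” “Batch Window” and “Concurrent batches per shard.” The function name, ARN and helper names are placeholders.

```javascript
// Option 1: estimate the runtime after changing the memory size,
// assuming runtime scales inversely with memory (a rule of thumb, not a guarantee).
function estimatedDurationMs(baseDurationMs, baseMemoryMb, newMemoryMb) {
  return baseDurationMs * (baseMemoryMb / newMemoryMb);
}

// Option 2: number of concurrent batches per shard needed to keep up
// when one batch arrives every arrivalMs and takes processingMs to process.
function requiredConcurrentBatches(arrivalMs, processingMs) {
  return Math.max(1, Math.ceil(processingMs / arrivalMs));
}

console.log(estimatedDurationMs(280, 128, 512)); // 70
console.log(requiredConcurrentBatches(100, 280)); // 3

// Trigger settings expressed as event source mapping parameters.
const eventSourceMappingParams = {
  FunctionName: 'covid-decision-service', // hypothetical function name
  EventSourceArn: 'arn:aws:kinesis:us-east-1:123456789012:stream/patient-records', // placeholder ARN
  StartingPosition: 'LATEST',
  BatchSize: 50,                      // "Batch Size"
  MaximumBatchingWindowInSeconds: 10, // "Batch Window"
  ParallelizationFactor: requiredConcurrentBatches(100, 280), // "Concurrent batches per shard"
};
console.log(eventSourceMappingParams);
```

Keeping these values in one place makes it easier to revisit them when the traffic pattern or the processing time of the rules changes.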

Adjustment to the Serverless Function

As we just saw, Kinesis will call the decision service with a variable number of records. To improve the performance of the running service, we will group all the records we receive under one payload. This is done in JavaScript code as shown below:

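```javascript
// Inside the Lambda handler, `event` is the Kinesis event passed in by the trigger.
const payload = [];

event.Records.forEach(function (record) {
  // Kinesis data is base64 encoded, so decode it before adding it to the payload.
  // Decoding as UTF-8 preserves any non-ASCII characters in the JSON record.
  const oneRecord = Buffer.from(record.kinesis.data, 'base64').toString('utf8');

  payload.push(JSON.parse(oneRecord));
});

// Group all records under a single root so the decision service runs once per batch.
const body = { root: payload };
```

Conclusion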

As you have seen, in a few minutes, as an integrator or an application architect, you can have a real-time stream created and connected to a set of decision services ready to process records at very large scale. By leveraging the Corticon.js no-code serverless rules engine, you can empower business specialists to author the decision services, freeing your development team to focus on the security and scalability of the solution.

Thierry Ciot

Thierry Ciot is a Software Architect on the Corticon Business Rule Management System. Ciot has gained broad experience in the development of products ranging from development tools to production monitoring systems. He is now focusing on bringing Business Rule Management to JavaScript and in particular to the serverless world where Corticon will shine. He holds two patents in the memory management space.