Connect Microsoft Power BI to Snowflake via OData

Introduction

This guide will walk you through connecting Microsoft Power BI to a Snowflake DataSet using the DataDirect Hybrid Data Pipeline.

Microsoft Power BI enables rich data visualization of your company’s data through its suite of analytics tools, letting you easily analyze and share insights in any device. Here’s how to take this awesome BI tool and integrate it with Snowflake data to take it to the next level.

Note: This tutorial assumes that you already have a Snowflake account and database. It also assumes that you already have Microsoft Power BI Desktop already installed.

Download and Install Hybrid Data Pipeline (HDP)

- Download Progress Hybrid Data Pipeline by visiting this page.

- Install Progress Hybrid Data Pipeline by following the below tutorials. Note that these are in generally applicable to any CentOS/RHEL machine, each of them varies a bit on specific cloud service settings.

Enable JDBC feature

The first thing you need to do is open Postman and send a PUT request, to enable JDBC driver feature in Hybrid Data Pipeline. You need to authenticate using basic authentication using the “d2cadmin” user you have configured while installing Hybrid Data Pipeline.

PUT https://<server>/api/admin/configurations/5Body{ "id": 5, "description": "Enable JDBC DataStore, when value is set to true, JDBC DataStore will be enabled.", "value": "true"}

This will enable third party JDBC feature in Hybrid Data Pipeline.

Add the Snowflake JDBC driver to classpath

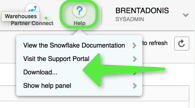

- Download the Snowflake JDBC driver click in the “Help” button on the top right of your Snowflake browser instance, and then select Download.

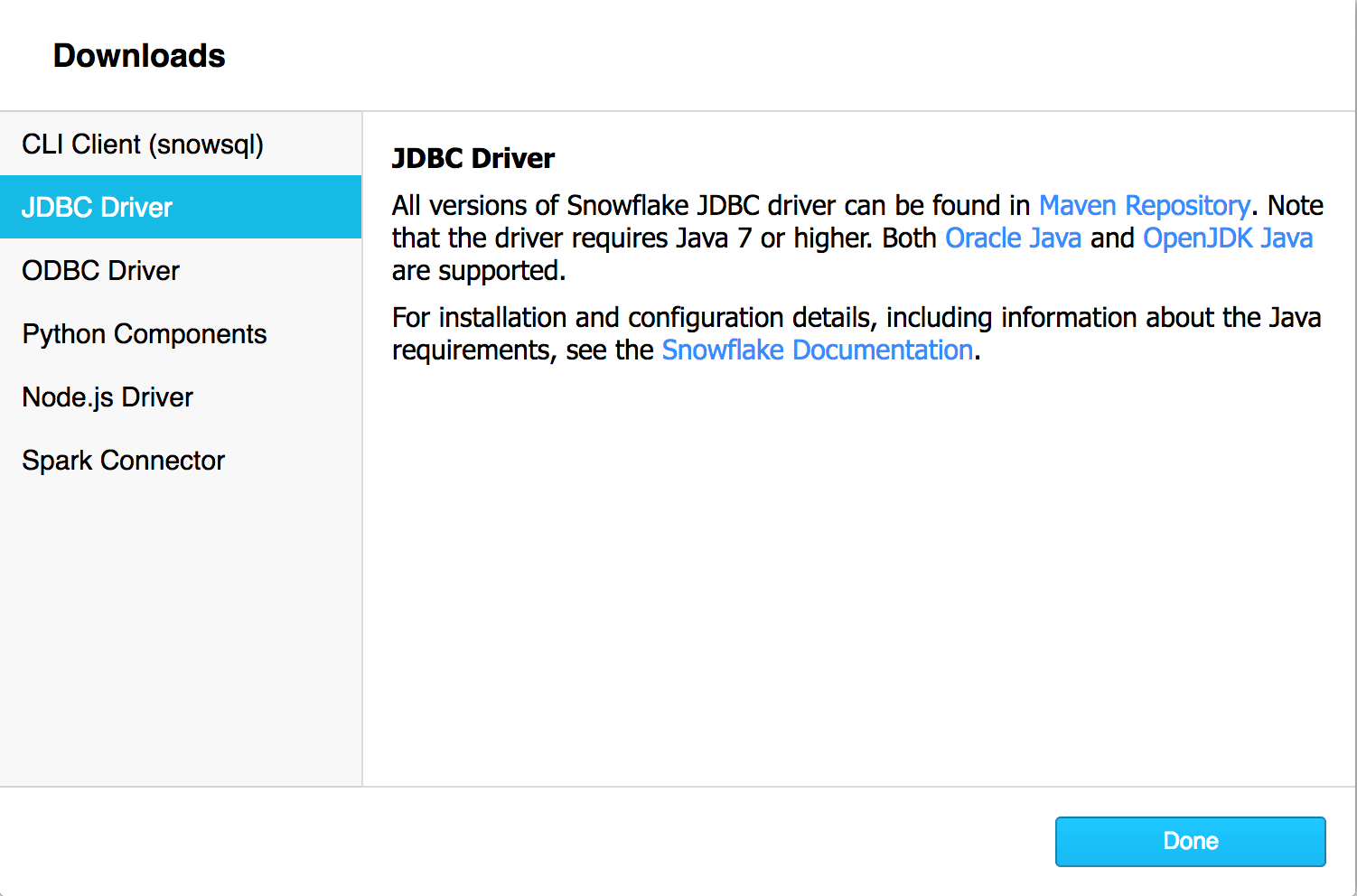

- Select the “JDBC Driver” options on the let of the screen and then select the “Maven Repository” and follow the links to download the latest JDBC driver for Snowflake. This tutorial used version 3.6.7.

- Copy the Snowflake JDBC driver to the following location in Hybrid Data Pipeline Server’s install location:

/opt/Progress/DataDirect/Hybrid_Data_Pipeline/Hybrid_Server/ddcloud/keystore/drivers

(note, this is the default installation folder. If you installed the software in a different folder, then locate the “ddcloud/keystore/drivers” folder of your installation.)

- Navigate to the path shown below on your Hybrid Data Pipeline Server and stop the HDP server, by running the following commands.

$ cd/opt/Progress/DataDirect/Hybrid_Data_Pipeline/Hybrid_Server/ddcloud/$ ./stop.sh

- Once the server has stopped, you can restart it again – this will allow the new driver added to be made available to the HDP Server.

$ ./start.shConfigure the Snowflake DataSource in Hybrid Data Pipeline

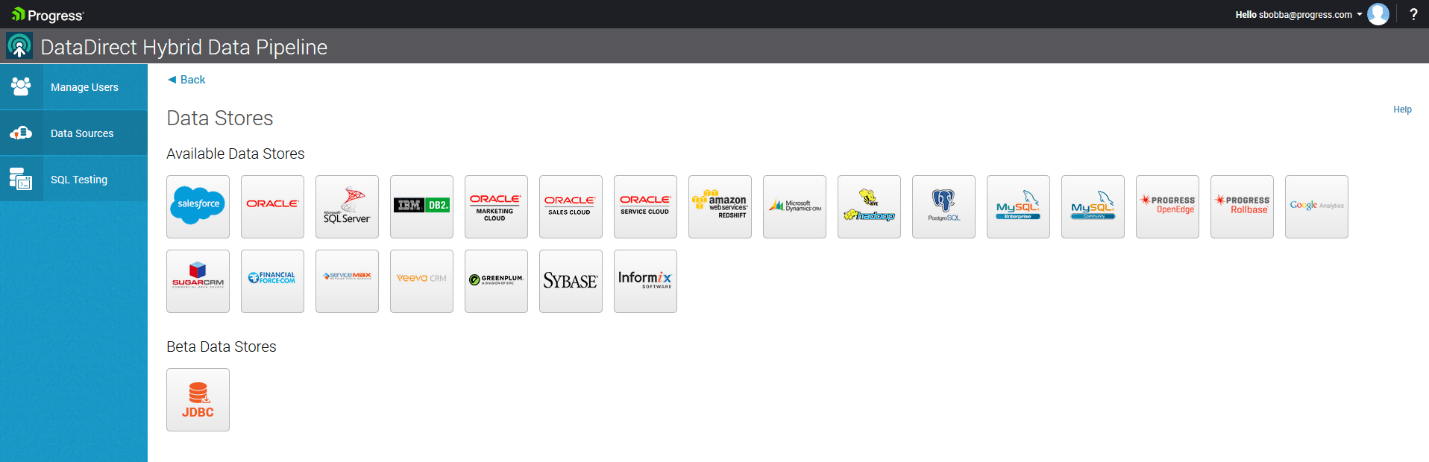

- Open your browser and go to http://<Server-Address>:8080 and log in to Hybrid Data Pipeline. Go to DataSources tab and create a New Data Source. Choose JDBC data store.

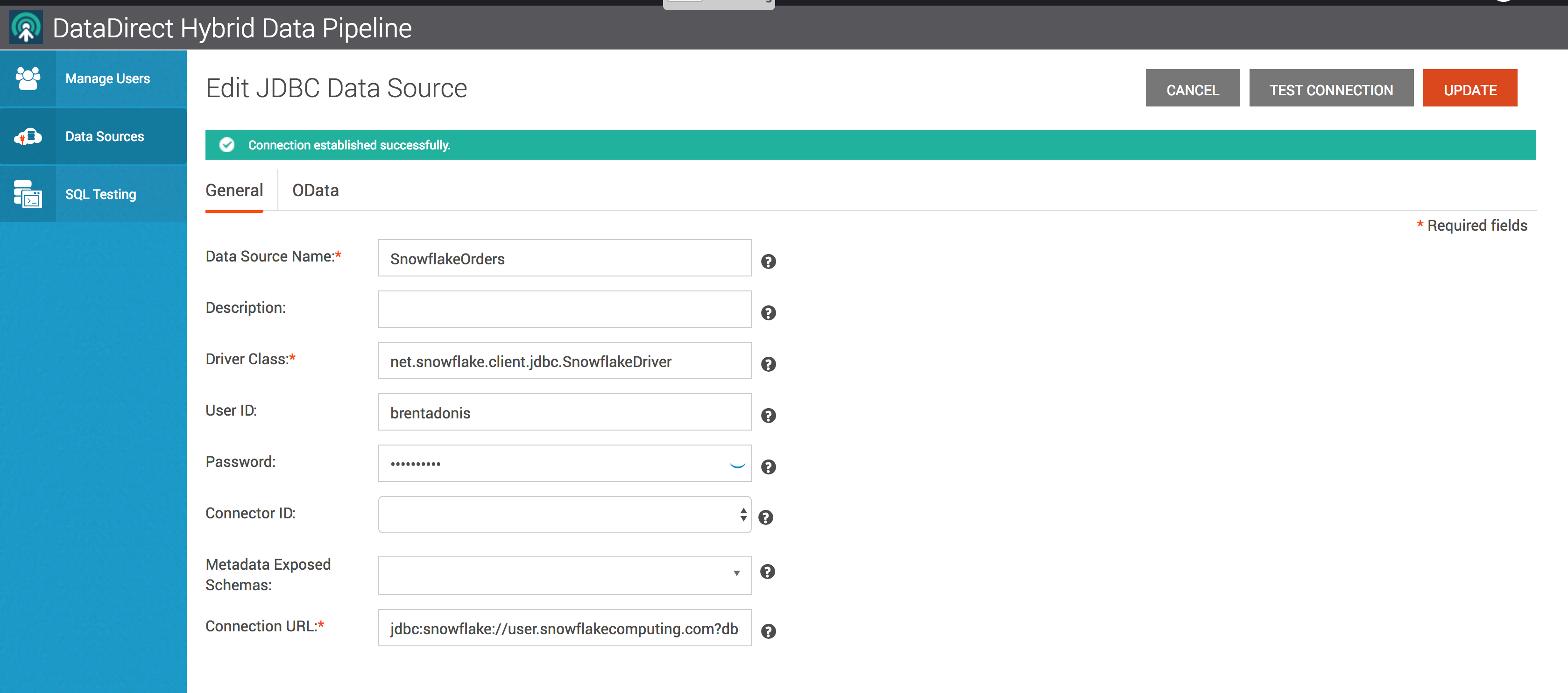

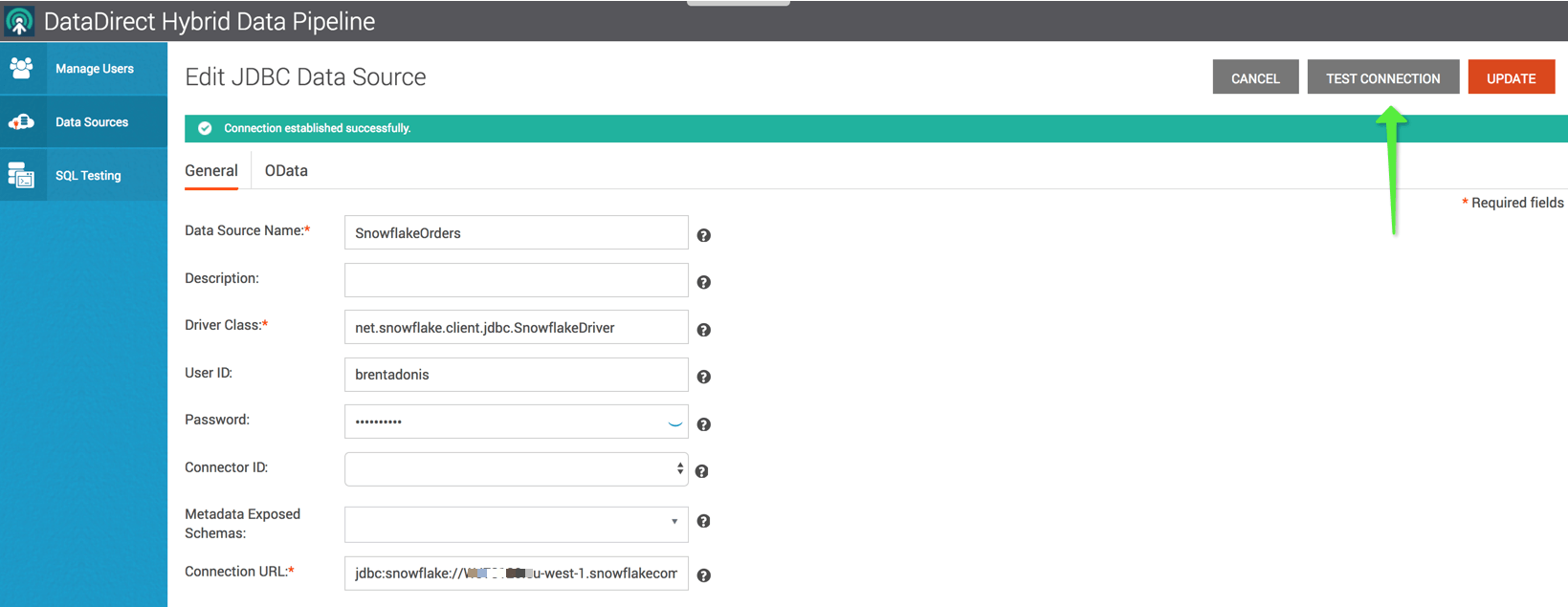

- Configure the Snowflake JDBC Data Source as shown below to connect to your Snowflake database.

- The Driver Class for Snowflake is:

Net.snowflake.client.jdbc.SnowflakeDriver

- The Connection URL should look similar to this:

jdbc:snowflake://SnowflakeUserID.snowflakecomputing.com?db=DEMO_DB

- Click on “Test Connection” and check if your configuration is correct.

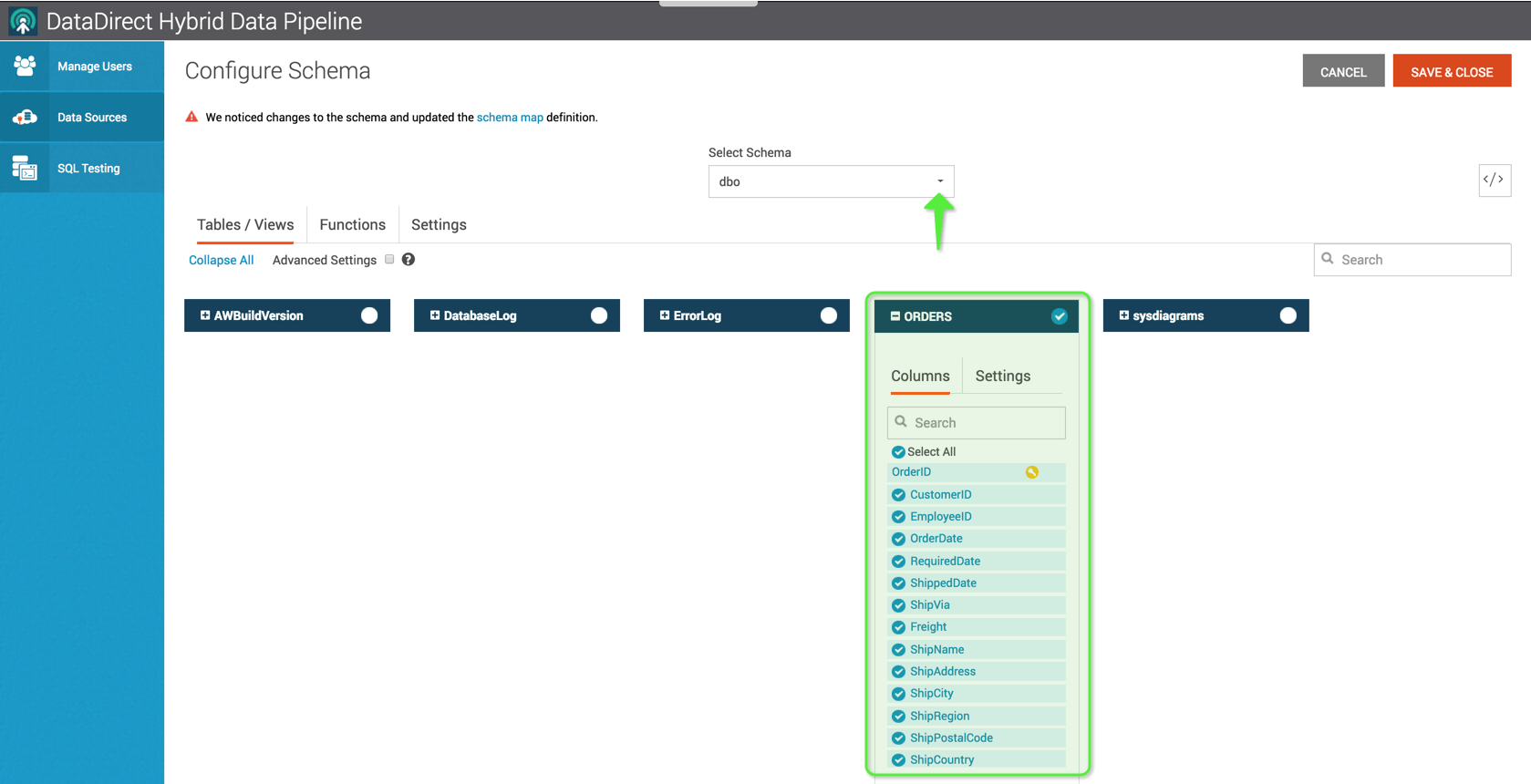

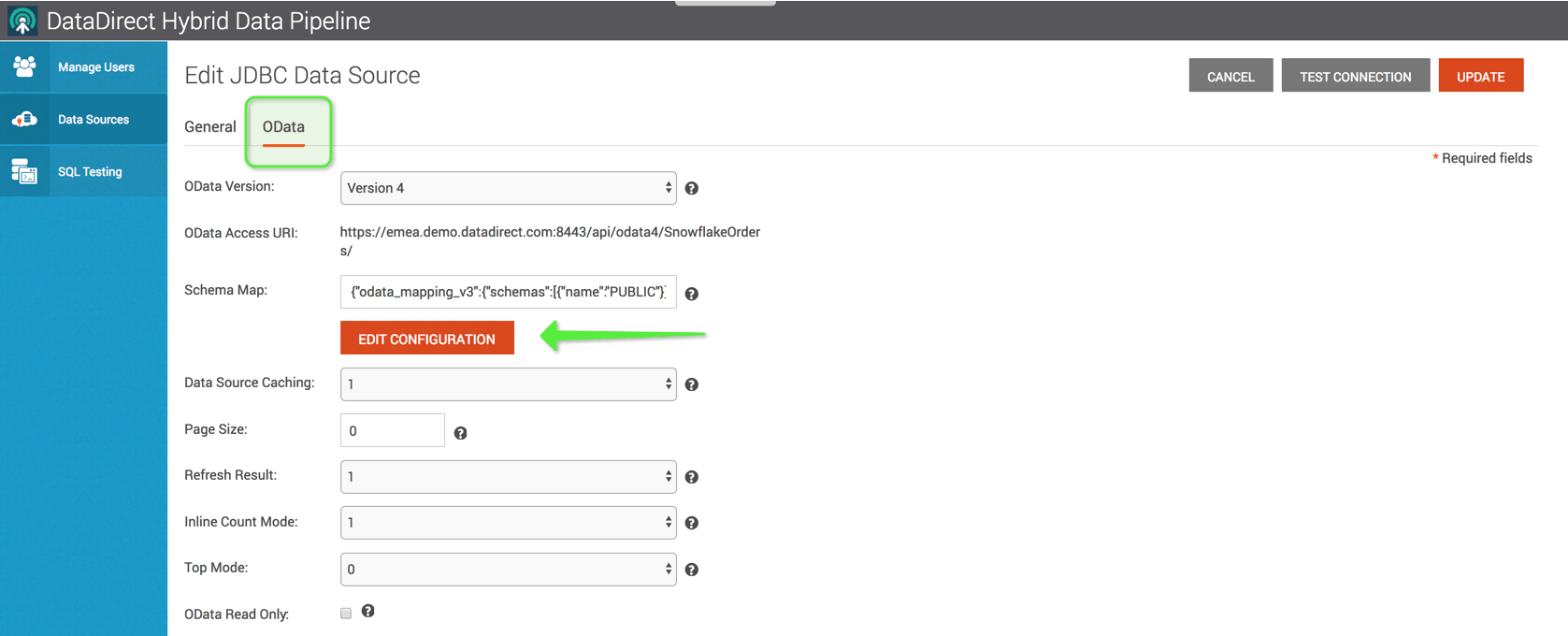

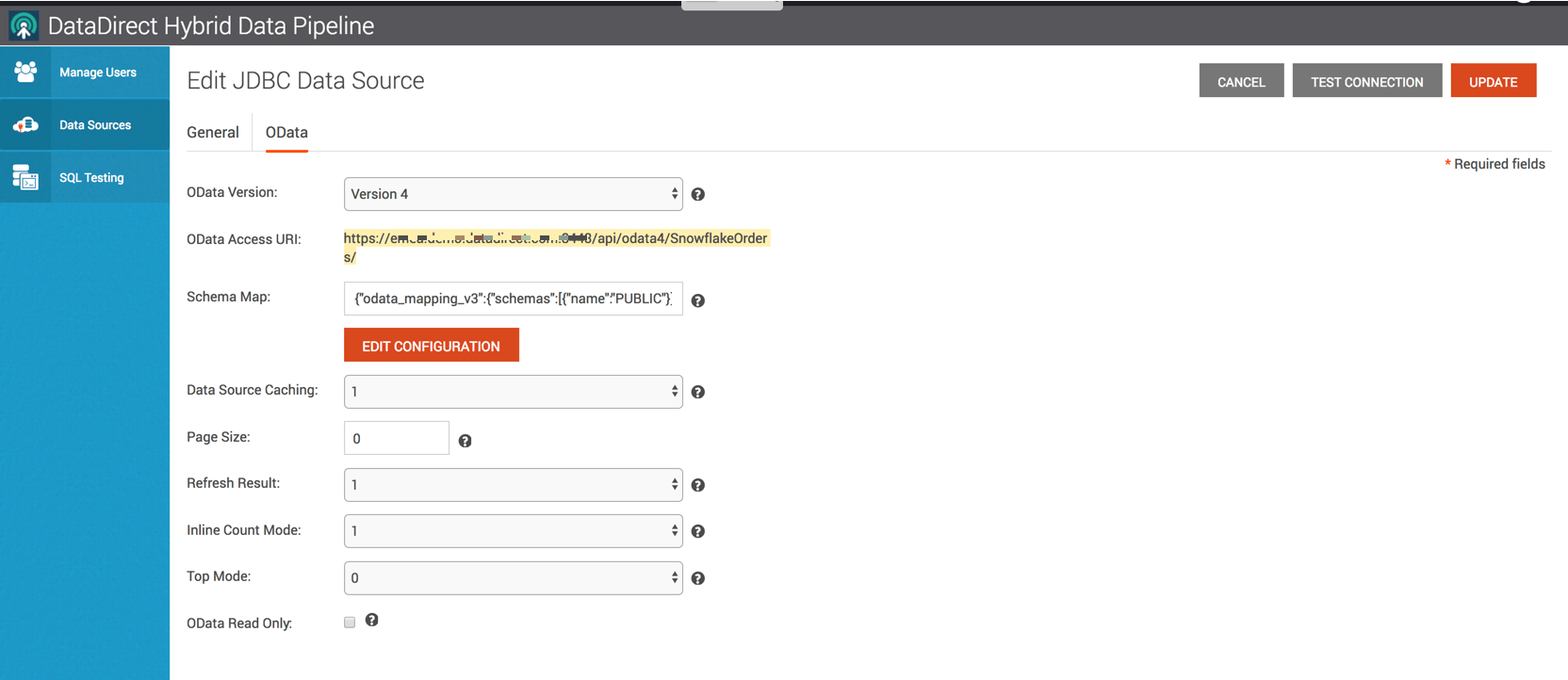

- Now select the “OData” tab to access the OData Configurator.

- Now, Metadata Exposed Schemas should be populated with all the schemas available in your database. Choose your schema.

Choose your schema and you should see list of tables as shown below. Select the tables you want to expose through OData REST API. I chose the “ORDERS” table in this case to expose through OData API.

- Click on Save and Close to save the settings.

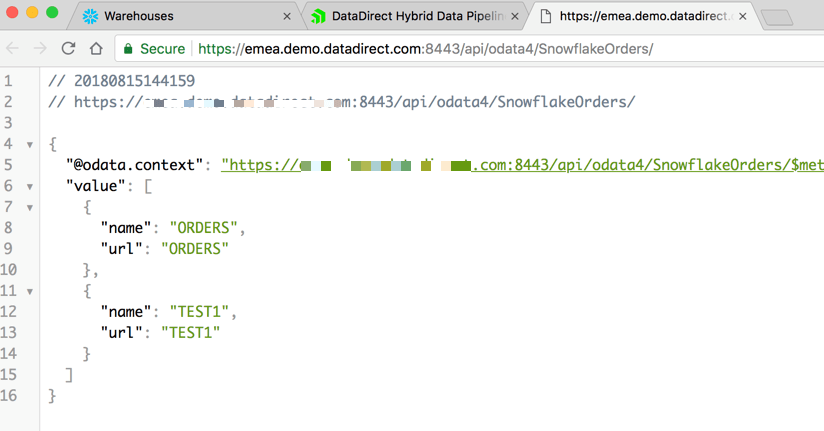

- Back on OData tab, you should find OData Access URI. Copy the URI to another tab and open it. As you might not have proper SSL certificate, you might see unsafe exception. To overcome it, change the URL to http://<Server>:8080/api/odata4/<yourdatasourcename>

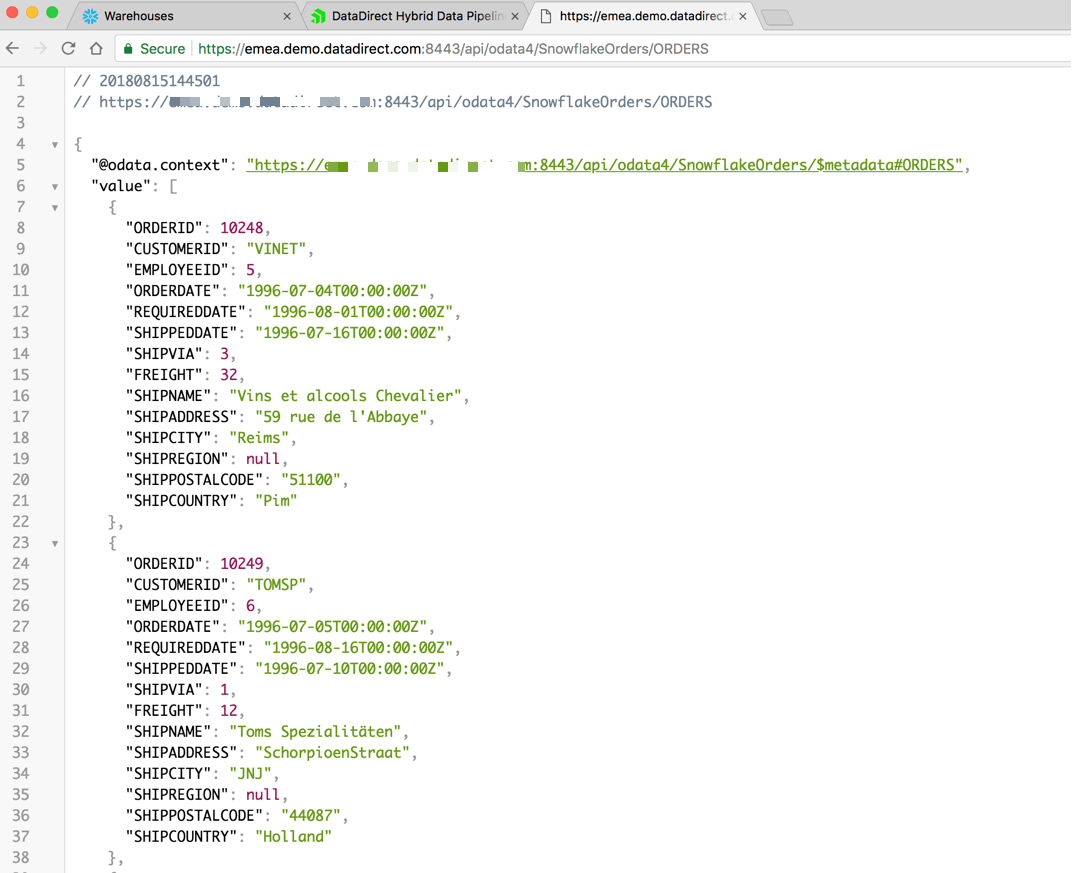

- You should now be prompted for your Hybrid Data Pipeline Credentials, after you enter it you should see the following response, which basically lists out all the tables that are exposed.

- To access the data in the ORDERS table, you can navigate to the URI: http://<server>:8080/api/odata4/SnowflakeOrders/ORDERS

Use the Snowflake OData URI in Microsoft PowerBI

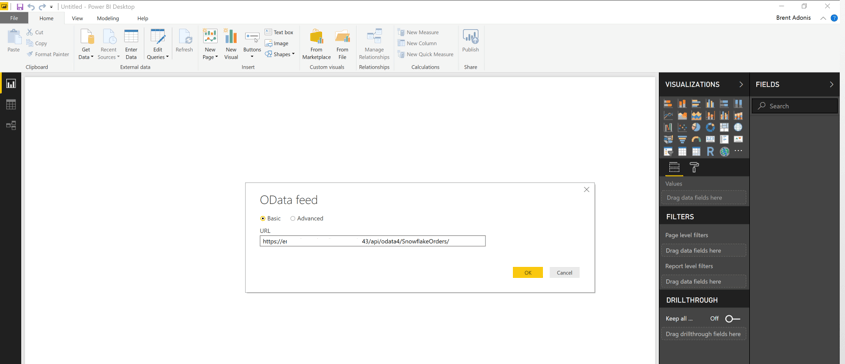

- Launch Microsoft Power BI and select New Report:

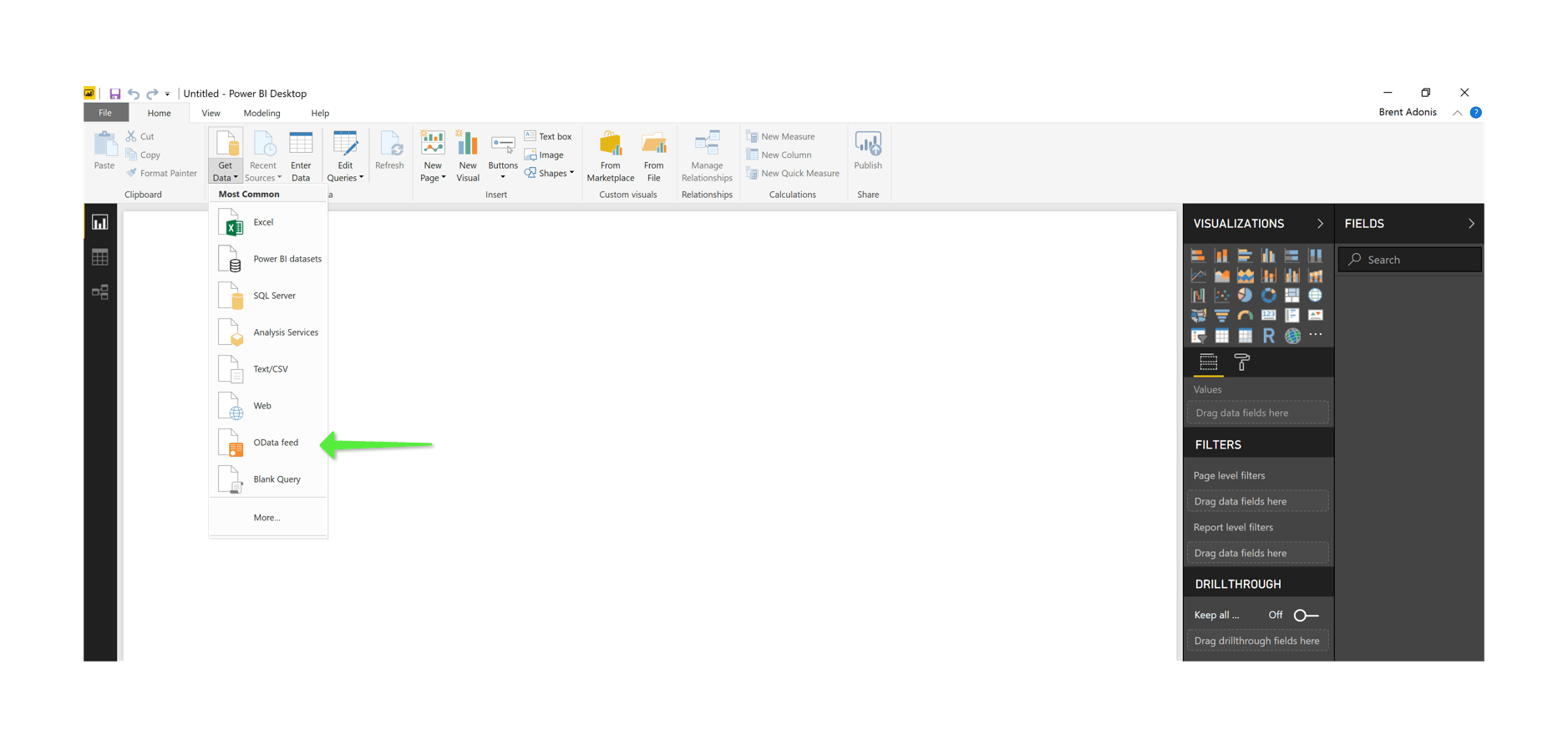

- Select ‘Get Data’ > OData Feed

- Enter the Hybrid Data Pipeline URI that you created.

You can copy this URI from the Hybrid Data Pipeline OData Configurator:

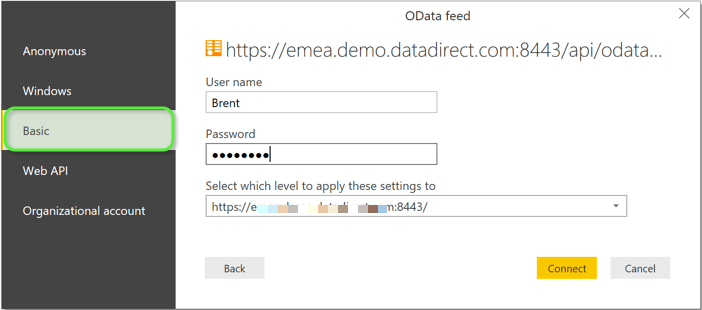

- Power BI will need your Hybrid Data Pipeline credentials to access the OData URI:

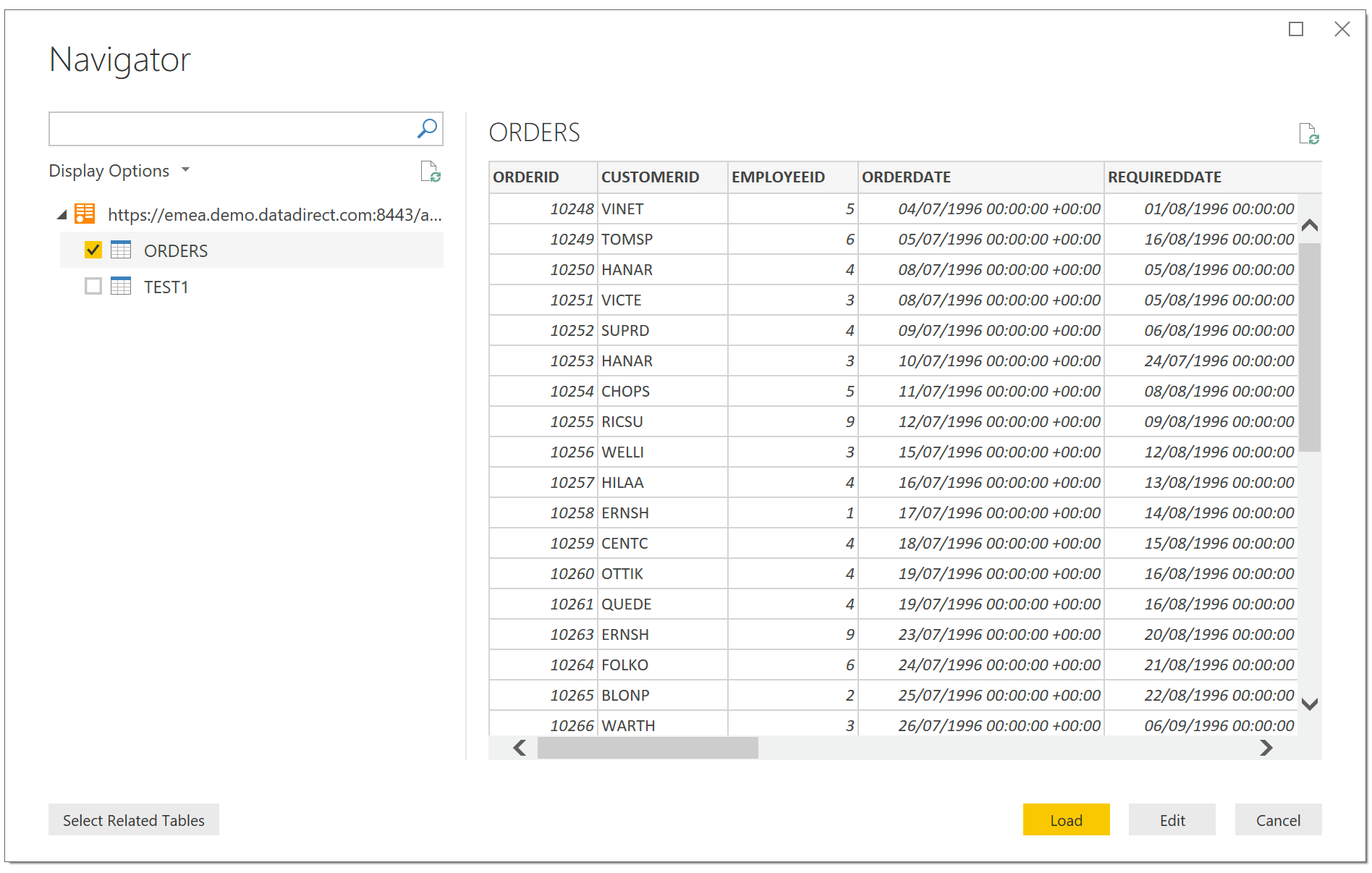

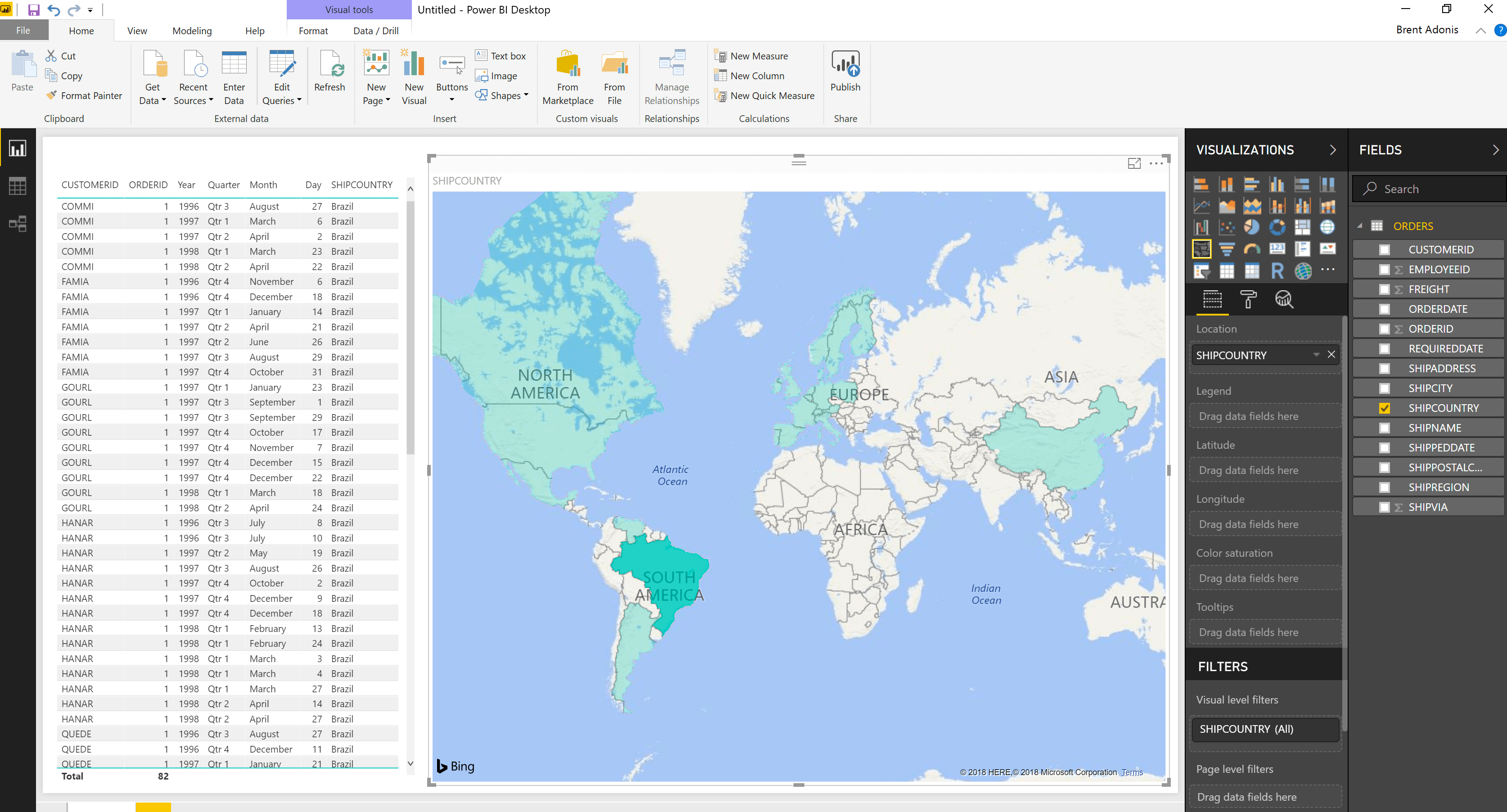

- On connecting, you will see a list of the Tables you made available via the Hybrid Data Pipeline OData Configurator. Select the Table(s) you want for this page. Power BI will give you a preview and then you can select the “Load” button to make the data from this table available to Power BI.

- This will bring up a blank canvas with the available objects on your right.

By selecting your desired Visualizations and adding columns to display in these visualizations you can create a simple, information screen like this:

You can use this same base OData URI with any other Visualization and analytical tools like Tableau, SAP Lumira, QlikView and even Microsoft Excel or for any of your custom uses. Feel free to try Progress Hybrid Data Pipeline and contact us if you have any questions or issues while using it.