Integrate your Enterprise or Internal API with Salesforce using Lightning Connect

Introduction

Do you have a custom Internal API that you use in your company and would like to access it in Salesforce via Salesforce Lightning Connect?

Do you have a custom API that you use internally in your company and would like to connect it to your favorite analytics tool, or integrate it with any other tool using standards-based connectivity like JDBC?

Do you have a data source which has a REST API and doesn’t have a JDBC driver, but you would like to have one for your analytics or integration purposes?

If so, you're in the right place. This tutorial shows how to integrate your enterprise APIs with Salesforce via Salesforce Lightning Connect and, in the process, how to use your API through standards-based data connectivity technologies such as ODBC, JDBC, and OData.

Here is an overview of how we are going to achieve this.

Step 1:

Build a JDBC driver for your API using Progress OpenAccess SDK in 2 hours.

Progress OpenAccess SDK is a framework that lets you develop ODBC and JDBC drivers from the same code base. The resulting drivers are fully featured and fully compliant with the ODBC and JDBC standards.

Step 2:

Use the JDBC driver you built with OpenAccess SDK in Progress Hybrid Data Pipeline to generate an OData 2.0/4.0 API for your internal API in 2 minutes.

Progress Hybrid Data Pipeline is our self-hostable hybrid connectivity solution that you can run in the cloud or on-premises. Data Pipeline can give you access to data in the cloud or on-premises behind a firewall. Connect through a standard interface—SQL (ODBC, JDBC) or REST (OData).

Step 3:

Use the OData 2.0/4.0 endpoint from Hybrid Data Pipeline with Salesforce Lightning Connect to access your data in Salesforce.

Step 1 - Build a JDBC driver for your API using Progress OpenAccess SDK

To start building your own JDBC driver for your custom internal API, follow this short tutorial:

Build your own Custom JDBC driver for REST API in 2 Hours

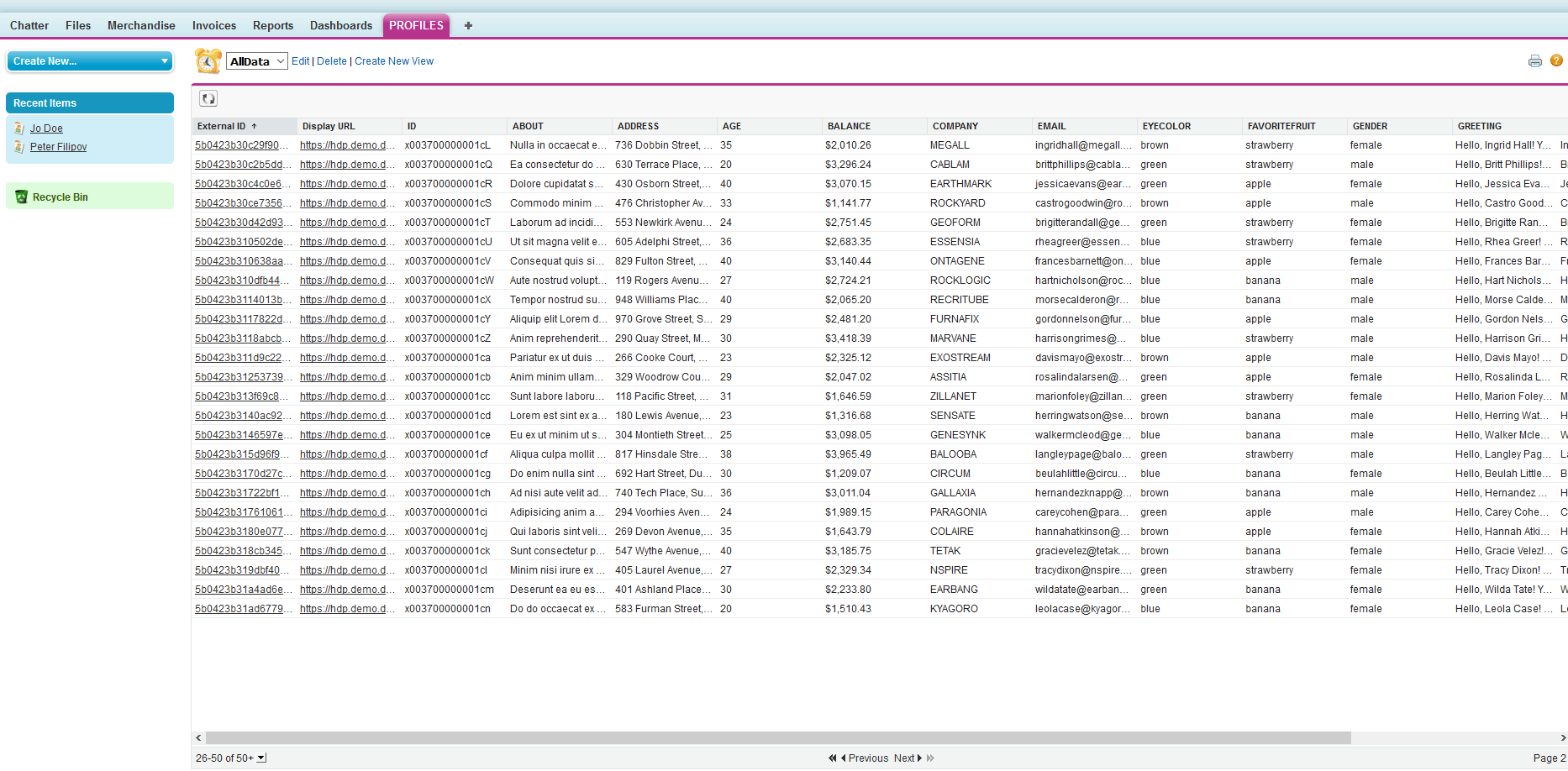

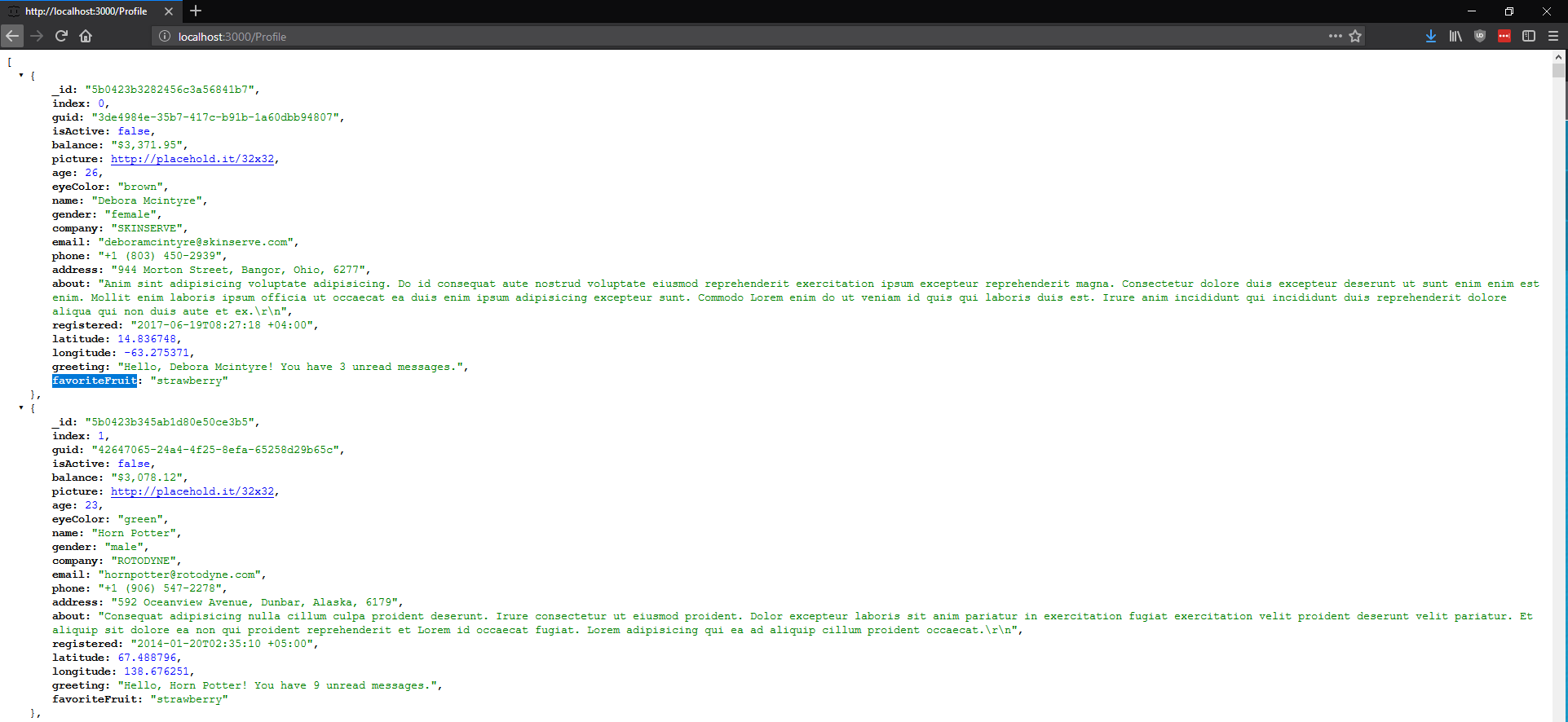

I built a JDBC driver for a REST API which lists sample profile data as shown below.

At this point, you can use this JDBC driver with any analytics tool or for any other data connectivity need.

Step 2 – Configure OData endpoint using Hybrid Data Pipeline

Here is a short tutorial on how you can install Progress Hybrid Data Pipeline. You need to have a valid SSL certificate for the server address before you install Hybrid Data Pipeline. Here are the requirements for Hybrid Data Pipeline: PEM File Configuration

Although the instructions are written for AWS, they should apply to any CentOS machine. After you have installed Hybrid Data Pipeline, you should be able to access it at https://<server>:8443 by default.

We assume that this server is in your DMZ or behind a reverse proxy, so that Salesforce can connect to it.

Enable JDBC feature

First, open Postman and send a PUT request to enable the JDBC driver feature in Hybrid Data Pipeline. Authenticate with basic auth as the “d2cadmin” user you configured while installing Hybrid Data Pipeline.

    PUT https://{servername}/api/admin/configurations/5
    Authorization: Basic Base64{user:password}
    Body
    {
      "id": 5,
      "description": "Enable JDBC DataStore, when value is set to true, JDBC DataStore will be enabled.",
      "value": "true"
    }

This enables the JDBC feature in Hybrid Data Pipeline.
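If you prefer a script to Postman, the same PUT request can be sketched in Python with only the standard library. This is a minimal sketch, not the product's official client: the function names, the example host, and the `Content-Type: application/json` header are assumptions added for illustration, while the URL path and JSON body are the ones shown above.

```python
import base64
import json
import urllib.request


def build_enable_jdbc_request(server, user, password):
    """Build (but do not send) the PUT request that enables the JDBC data store."""
    body = {
        "id": 5,
        "description": "Enable JDBC DataStore, when value is set to true, "
                       "JDBC DataStore will be enabled.",
        "value": "true",
    }
    # Basic auth is "user:password" encoded as Base64.
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=f"https://{server}/api/admin/configurations/5",
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
        headers={
            "Authorization": f"Basic {token}",
            # Assumption: the server expects a JSON content type.
            "Content-Type": "application/json",
        },
    )


def enable_jdbc(server, user, password):
    """Send the request and return the HTTP status code and response text."""
    request = build_enable_jdbc_request(server, user, password)
    with urllib.request.urlopen(request) as response:
        return response.status, response.read().decode("utf-8")
```

For example, `enable_jdbc("hdp.example.com:8443", "d2cadmin", "<password>")` would send the request to a hypothetical server; substitute your own host and credentials.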

Plug the driver into HDP

To plug the OpenAccess JDBC driver (oajc.jar) into HDP, copy it to the following location:

<install_root>/ddcloud/keystore/drivers
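If you deploy the driver from a script, the copy step might look like the sketch below. The helper name `install_driver` and its arguments are hypothetical; the only detail taken from this tutorial is the `ddcloud/keystore/drivers` folder under the install root. The server still has to be restarted afterwards.

```python
import shutil
from pathlib import Path


def install_driver(jar_path, install_root):
    """Copy a driver jar into <install_root>/ddcloud/keystore/drivers."""
    drivers_dir = Path(install_root) / "ddcloud" / "keystore" / "drivers"
    # Fail early instead of creating a folder the server does not know about.
    if not drivers_dir.is_dir():
        raise FileNotFoundError(f"drivers directory not found: {drivers_dir}")
    # copy2 keeps file metadata and returns the path of the copied file.
    return Path(shutil.copy2(jar_path, drivers_dir))
```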

Now stop and start the Hybrid Data Pipeline server by running the scripts shown below from the Hybrid Data Pipeline install folder.

    $ pwd
    /home/users/<user>/Progress/DataDirect/Hybrid_data_Pipeline/Hybrid_Server/ddcloud
    $ ./stop.sh
    $ ./start.sh

Configure your Custom JDBC driver in Hybrid Data Pipeline

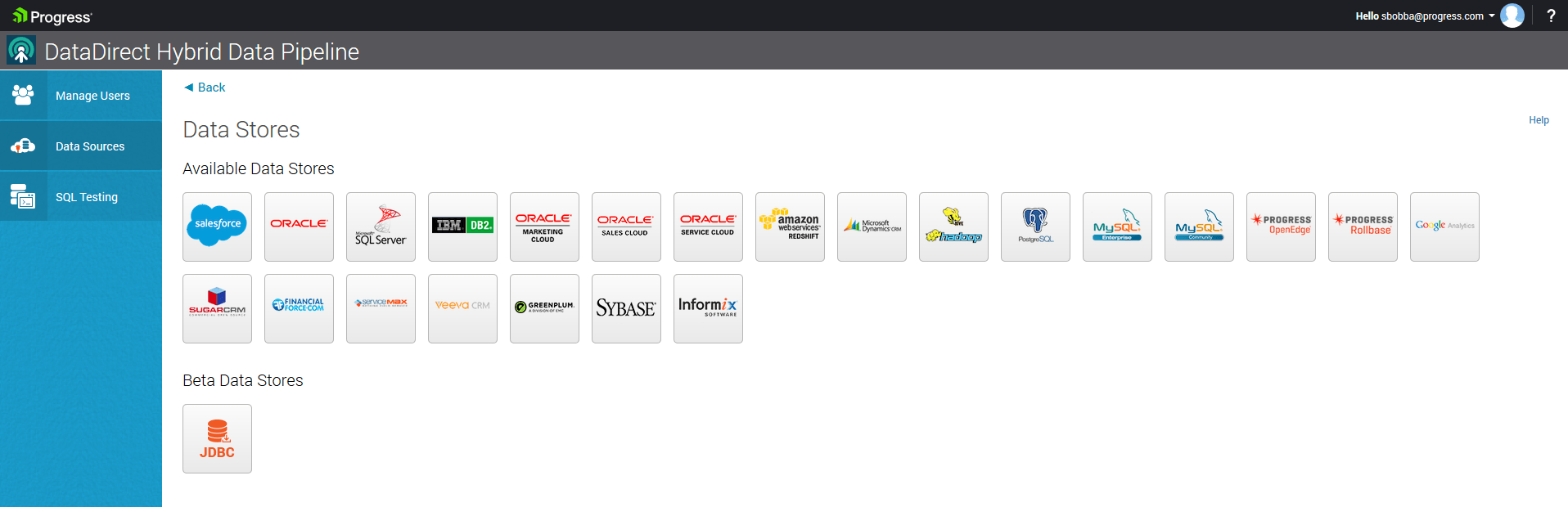

- After you have restarted the server, go to https://<server>:8443 to access the web UI. Go to the Data Sources tab and create a new data source. Choose the JDBC data store.

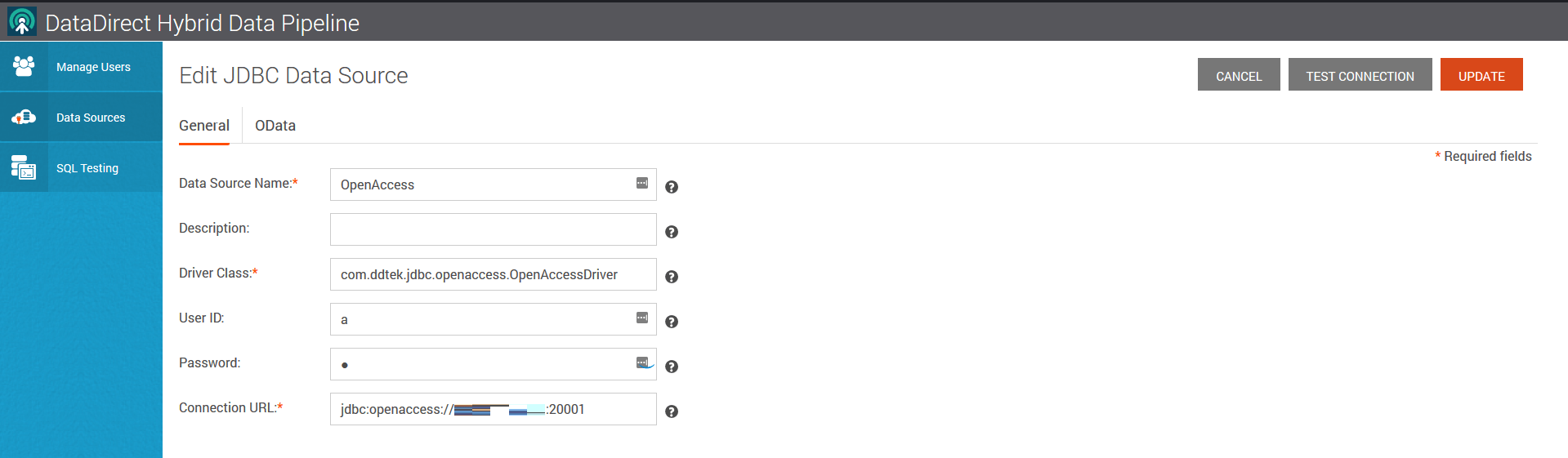

- Configure the JDBC data source as shown below to connect to the OpenAccess Service that you wrote previously.

- Click on Test Connection and you should be able to connect to your OpenAccess Service.

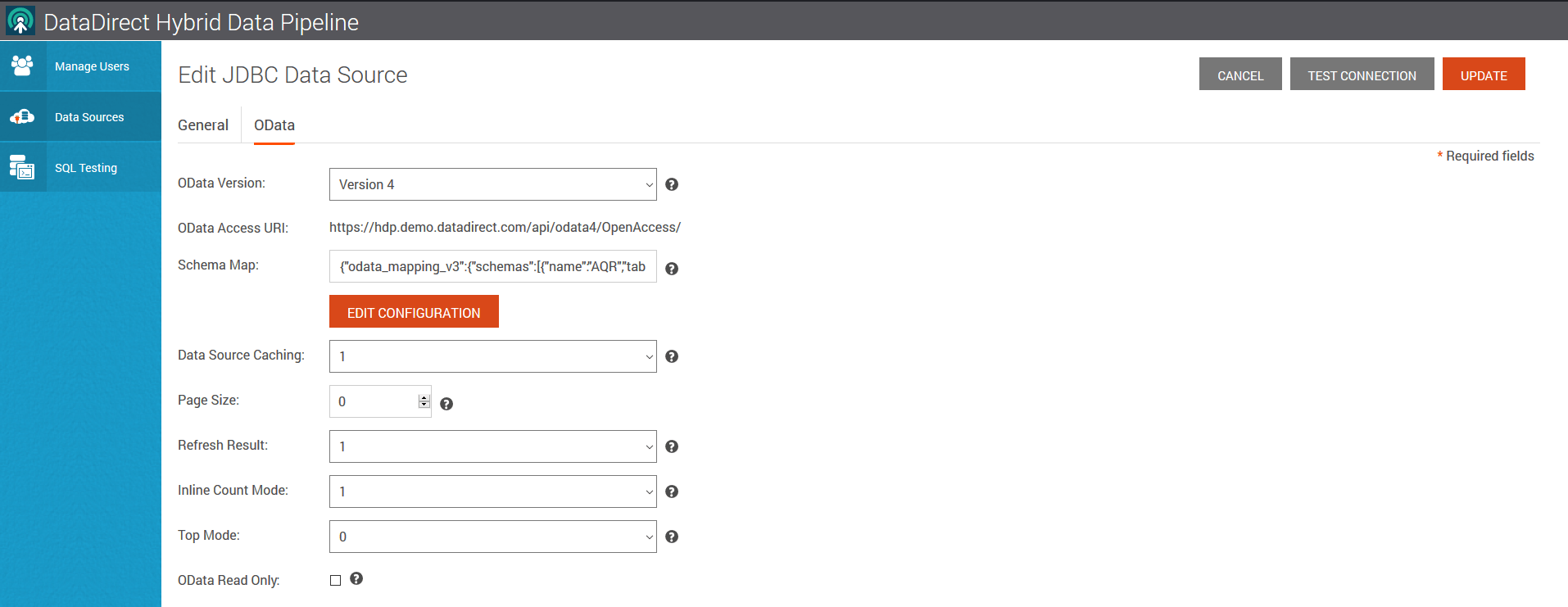

- Go to the OData tab and click the Configure Schema button. You should now see the list of schemas that you configured when building the JDBC driver. Choose the one you want.

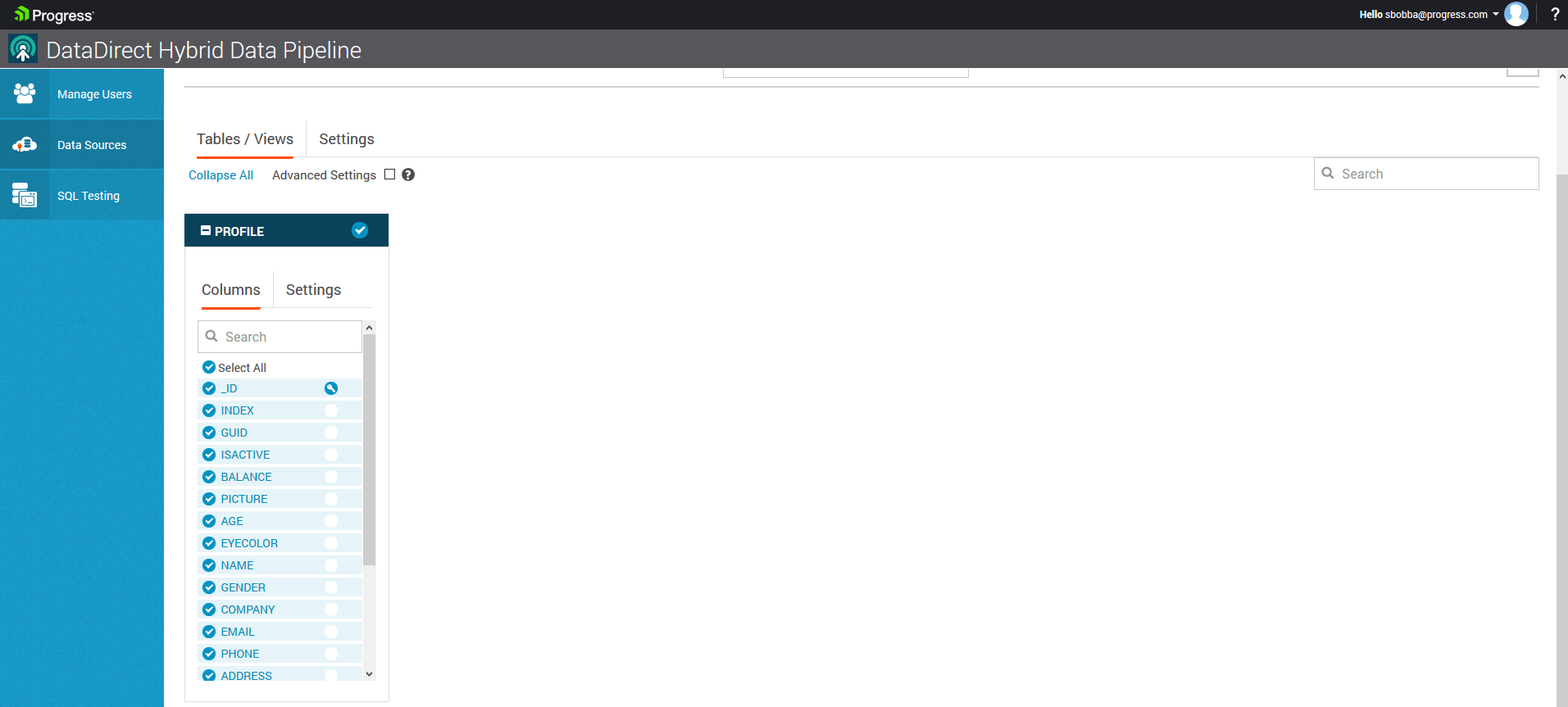

- Once you have selected it, you should see the list of tables as shown below. In my case, I have only one table, which is based on my Profiles REST API endpoint.

- On the OData tab, you should find your OData 4.0 endpoint URL as shown below.

- Choose a primary key column for your table, select it, and click Save and Close.

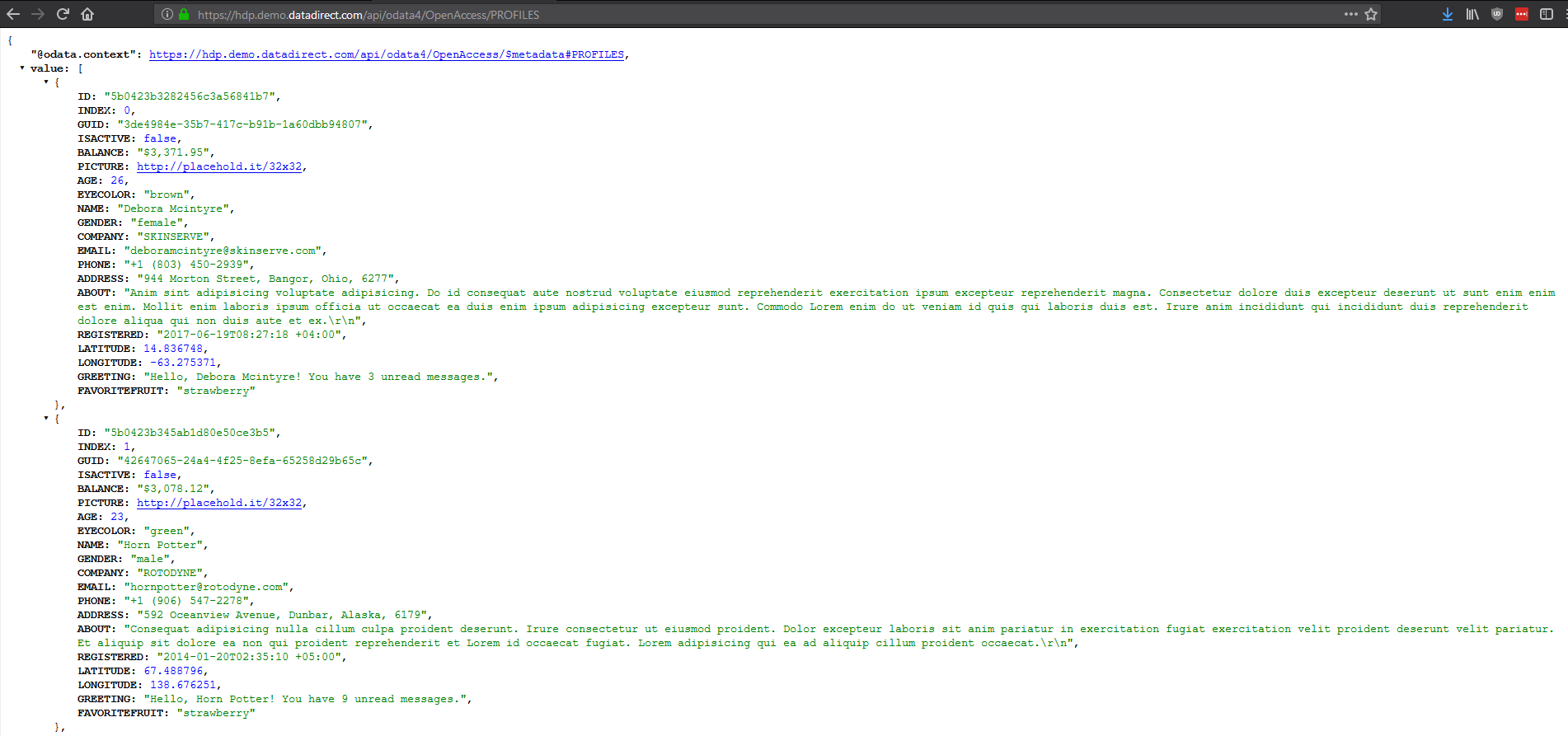

- Copy the OData endpoint URL and open it in a new browser tab. You should see your REST API exposed as an OData 4.0 API, as shown below.
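Beyond opening the endpoint in a browser, you can query it with the standard OData system query options `$top` and `$filter`. The sketch below only builds the request URL. The function name, the example endpoint URL, and the `id` column are assumptions for illustration; use the endpoint URL you copied from the OData tab and a column that exists in your own table.

```python
from urllib.parse import quote, urlencode


def build_odata_query(endpoint_url, entity_set, top=None, filter_expr=None):
    """Build an OData query URL for one entity set (table)."""
    url = f"{endpoint_url.rstrip('/')}/{quote(entity_set)}"
    options = {}
    if top is not None:
        options["$top"] = str(top)        # limit the number of rows returned
    if filter_expr:
        options["$filter"] = filter_expr  # for example "id eq 1"
    if options:
        # Keep "$" readable in option names and encode spaces as %20.
        url += "?" + urlencode(options, quote_via=quote, safe="$")
    return url


# Hypothetical endpoint URL and column name.
print(build_odata_query(
    "https://hdp.example.com:8443/api/odata4/profiles_ds",
    "Profiles",
    top=5,
    filter_expr="id eq 1",
))
```

The resulting URL can be opened in a browser or sent with any HTTP client, using the same credentials you use for Hybrid Data Pipeline.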

Step 3 - Access your REST API from Salesforce

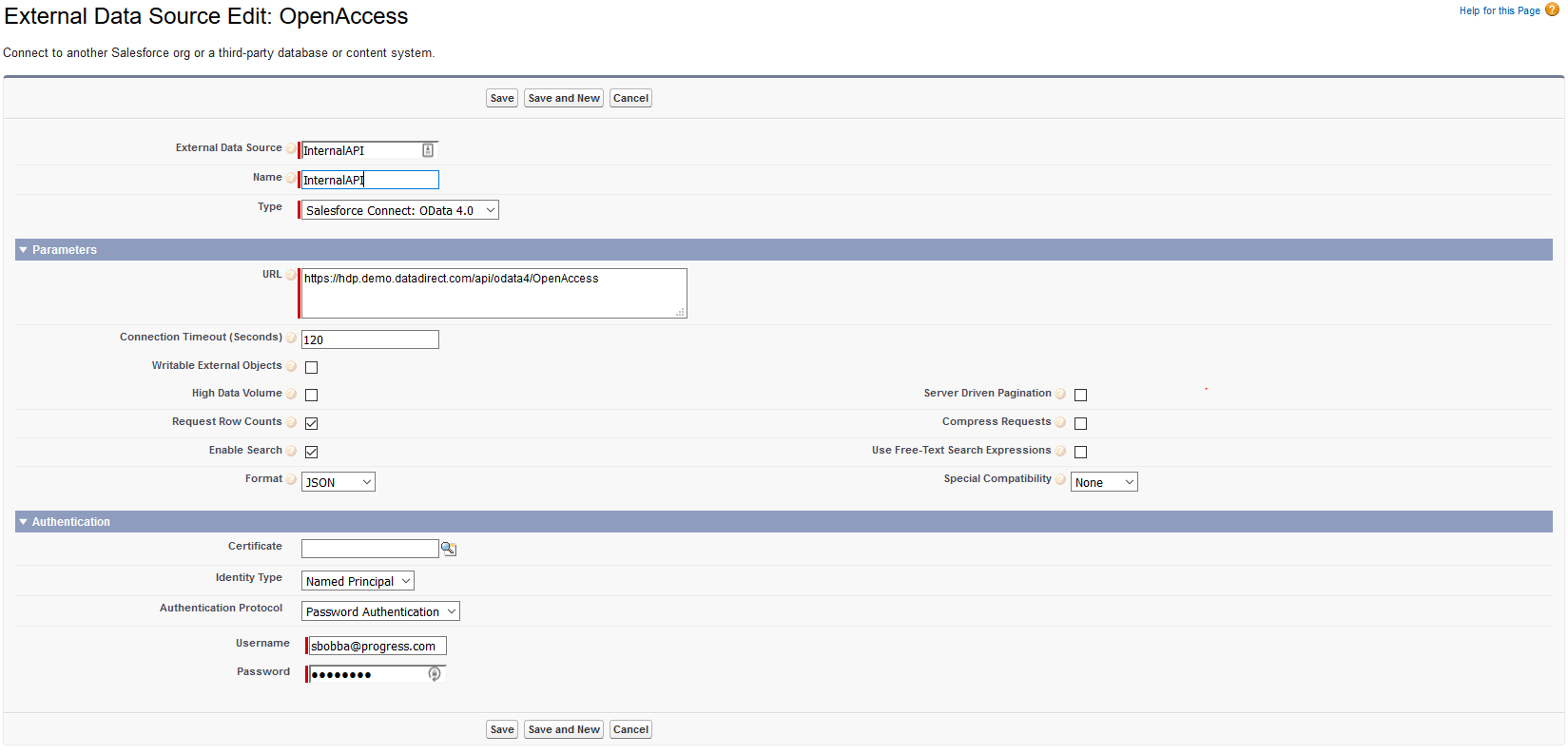

- Log in to Salesforce, go to Develop -> External Data Sources, create a new data source, and configure it as shown below.

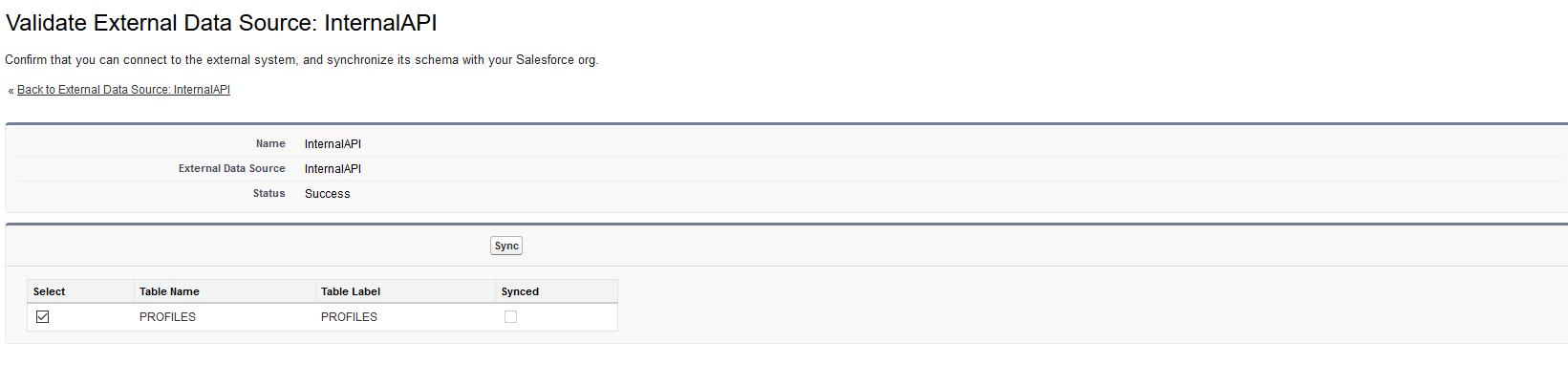

- Click the Save button after you have configured the External Data Source. On the next screen, click Validate and Sync.

- On the next screen, choose the tables that you want to sync. In my case, I have only one table, the Profiles table, so I am going to select it and click Sync.

- You should now be able to access your data from your internal REST API from Salesforce.
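Once a table is synced, Salesforce exposes it as an external object whose API name ends in `__x`, and it can be queried with SOQL like any other object. As a rough sketch, the snippet below builds a Salesforce REST API query URL for such an object. The object name `Profiles__x`, the instance URL, and the API version are assumptions; check the actual API name on the external object's detail page. Sending the request also requires an OAuth access token in an `Authorization: Bearer` header, which is not shown here.

```python
from urllib.parse import quote


def build_soql_query_url(instance_url, api_version, soql):
    """Build a Salesforce REST API query URL for a SOQL statement."""
    base = instance_url.rstrip("/")
    # The SOQL text travels in the "q" query parameter, fully URL-encoded.
    return f"{base}/services/data/v{api_version}/query/?q={quote(soql, safe='')}"


# Hypothetical instance URL, API version, and external object name.
print(build_soql_query_url(
    "https://example.my.salesforce.com",
    "58.0",
    "SELECT ExternalId FROM Profiles__x LIMIT 5",
))
```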